
- Custom Pages

- Pages

- Posts

- Backup

- Configuration Management

- Container

- K3s

- Kubernetes

- MISC

- Revision Control

- RSS

- IRC

- Text Editors

- Footnotes

- COMMENT Local Variables

Custom Pages

Pages

About

Who am I ?

I am a DevOps cloud engineer with a passion for technology, automation, Linux and OpenSource. I've been on Linux since the early 2000's and have contributed, in some small capacity, to some open source projects along the way.

I dabble in this space and I blog about it. This is how I learn, this is how I evolve.

Contact Me

If, for some reason, you'd like to get in touch, you have several options.

- Find me on libera in #LearnAndTeach.

- Email me at blog[at]lazkani[dot]io

If you use GPG and you should, my public key is 2383 8945 E07E 670A 4BFE 39E6 FBD8 1F2B 1F48 8C2B

FAQ

What is this ?

This is my humble blog where I post things related to DevOps in hope that I or someone else might benefit from it.

Wait what ? What is DevOps ?

Duckduckgo defines DevOps as:

DevOps is a software engineering culture and practice that aims at unifying software development and software operation. The main characteristic of the DevOps movement is to strongly advocate automation and monitoring at all steps of software construction, from integration, testing, releasing to deployment and infrastructure management. DevOps aims at shorter development cycles, increased deployment frequency, and more dependable releases, in close alignment with business objectives.

In short, we build an infrastructure that is easily deployable, maintainable and, in all forms, makes the lives of the developers a breeze.

What do you blog about ?

Anything and everything related to DevOps. The field is very big and complex with a lot of different tools and technologies implemented.

I try to blog about interesting and new things as much as possible, when time permits.

Does this blog have RSS ?

Yup, here's the link.

Posts

Backup @backup

DONE BorgBackup borg borgbackup

I usually lurk around Freenode in a few projects that I use, can learn from and/or help with. This is a great opportunity to learn new things all the time.

This story starts the same way, but that's where the similarities end. Someone asked a question in #Weechat that caught my attention because it was, sort of, off topic. The question was: how do you back up your stuff ?

I mean if I were asked that, I would've mentioned revision controlled off-site repositories for the code that I have.

For the personal stuff on the other hand, I would've admitted simple rudimentary solutions like rsync, tar and external drives.

So I was sort of happy with my backup solution, it has worked. Plain and simple.

I have to admit that, by modern standards it might not offer the ability to go back in time to a certain point. But I use file systems that offer snapshot capabilities. I can recover from previous snapshots and send them somewhere safe. Archiving and encrypting those is not a simple process, wish it was. That limits storage possibilities if you care to keep your data private.

But if you know me, you'd know that I'm always open to new ways of doing things.

I can't remember the conversation exactly, but the name BorgBackup was mentioned (thank you, whoever you are). That's when things changed.

BorgBackup

Borg is defined as a

Deduplicating archiver with compression and encryption

Although this is a very accurate and encompassing definition, it doesn't really show you how AWESOME this thing is.

I had to go to the docs first before I stumbled upon this video.

It can be a bit difficult to follow the video, I understand.

This is why I decided to write this post, to sort of explain to you how Borg can backup your stuff.

Encryption

Oh yeah, that's the first thing I look at when I consider any suggested backup solution. Borg offers built-in encryption and authentication. You can read about it in details in the docs.

So that's a check.

Compression

This is another thing I look for in a suggested backup solution. And I'm happy to report that Borg has this under the belt as well. Borg currently supports LZ4, zlib, LZMA and zstd. You can also tune the level of compression. Pretty neat !
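To get a feel for what tuning the compression level means, here is a small sketch that uses Python's standard library rather than Borg itself. It only covers zlib and LZMA, and the sample data is made up, so treat the numbers it prints as an illustration and not as a benchmark.

```python
import lzma
import zlib

# Made-up, highly repetitive sample data; real files will compress differently.
data = b"All work and no play makes Jack a dull boy.\n" * 2000


def compressed_sizes(payload: bytes) -> dict:
    """Return the compressed size in bytes for a few algorithm/level pairs."""
    return {
        "zlib,1": len(zlib.compress(payload, 1)),
        "zlib,9": len(zlib.compress(payload, 9)),
        "lzma,6": len(lzma.compress(payload, preset=6)),
    }


sizes = compressed_sizes(data)
print(f"original: {len(data)} bytes")
for name, size in sizes.items():
    print(f"{name}: {size} bytes")
```

Higher levels usually trade CPU time for smaller output, which is the same trade-off you make when you pick a level in Borg.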

Full Backup

I've watched a few videos and read a bit of their documentation and they talk about FULL BACKUP. Which means every time you run Borg, it will take a full backup of your stuff. A full backup at that point in time, don't forget. The implication of this is that you have a versioned list of your backups, and you can go back in time to any of them.

Yes, you read that right. Borg does a full backup every time you run it. That's a pretty neat feature.

If you're a bit ahead of me, you were gonna say woooow there bud ! I have Gigabytes of data, what do you mean FULL BACKUP, you keep saying FULL BACKUP.

I mean FULL BACKUP, wait until you hear about the next feature.

Deduplication

Booyah ! It has deduplication. Ain't that awesome. I've watched a presentation by the project's original maintainer explain this. I have one thing to say. It's pretty good. How good, you may ask ?

My answer would be, good enough to fool me into thinking that it was taking snapshots of my data.

------------------------------------------------------------------------------
                       Original size      Compressed size    Deduplicated size
All archives:               34.59 GB              9.63 GB              1.28 GB

                       Unique chunks         Total chunks
Chunk index:                   47772               469277

It wasn't until I dug in deeper into the matter that I understood that it was a full backup and the deduping taking care of the rest.
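The idea of "a full backup every time, with deduplication taking care of the rest" can be sketched in a few lines of Python. This is a toy model under loud assumptions: it uses tiny fixed-size chunks and a plain dictionary as the repository, whereas Borg's real chunker is content-defined and far more sophisticated. The function names are mine, not Borg's.

```python
import hashlib

CHUNK_SIZE = 4  # tiny on purpose so the example is easy to follow


def backup(data: bytes, store: dict) -> list:
    """Store unseen chunks in `store` and return the archive as a list of chunk ids."""
    archive = []
    for start in range(0, len(data), CHUNK_SIZE):
        chunk = data[start:start + CHUNK_SIZE]
        chunk_id = hashlib.sha256(chunk).hexdigest()
        store.setdefault(chunk_id, chunk)  # only new chunks take up space
        archive.append(chunk_id)
    return archive


def restore(archive: list, store: dict) -> bytes:
    """Rebuild the full data of one archive from the shared chunk store."""
    return b"".join(store[chunk_id] for chunk_id in archive)


store = {}
monday = backup(b"AAAABBBBCCCCDDDD", store)
chunks_after_monday = len(store)
tuesday = backup(b"AAAABBBBCCCCEEEE", store)  # only the last chunk changed

print(chunks_after_monday, len(store))
```

Both archives are complete on their own, yet the second one only added a single new chunk to the store. That is why the deduplicated size in the output above is so much smaller than the original size.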

Check

Borg offers a way to verify the consistency of the repository and the archives within. This way, you can make sure that your backups haven't been corrupted.

This is a very good feature, and a must in my opinion from a backup solution. Borg has YOU covered.
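To illustrate what a consistency check buys you, here is a minimal sketch of the general idea: if every stored chunk is named after the hash of its content, corruption can be detected by hashing again and comparing. This is not Borg's on-disk format nor its actual check procedure, just an assumption-laden toy.

```python
import hashlib


def find_corrupted(store: dict) -> list:
    """Return the ids of chunks whose content no longer matches their id."""
    return [
        chunk_id
        for chunk_id, chunk in store.items()
        if hashlib.sha256(chunk).hexdigest() != chunk_id
    ]


good = b"important data"
store = {hashlib.sha256(good).hexdigest(): good}
healthy_report = find_corrupted(store)

# Simulate bit rot by changing the stored bytes behind the id's back.
chunk_id = next(iter(store))
store[chunk_id] = b"importent data"
damaged_report = find_corrupted(store)

print(healthy_report, damaged_report == [chunk_id])
```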

Restore

A backup solution is nothing if you can't get your data back. Borg has a few ways for you to get your data. You can create an archive file out of a backup. You can export a file, a directory or the whole directory tree from a backup. You can also, if you like, mount a backup and get stuff out.

warning

Mounting a Borg backup is done using fuse

Conclusion

Borg is a great tool for backup. It comes in an easily installable self-contained binary so you can use it, pretty much, anywhere giving you no excuse whatsoever not to use it. Their documentation is very good, and Borg is easy to use. It offers you all the features you need to do off-site and on-site backups of all your important data.

I'll be testing Borg moving forward for my data. I'll make sure to report back anything I find, in the future, related to the subject.

DONE Automating Borg borgmatic borgbackup borg

In the previous blog post, entitled BorgBackup, I talked about borg. If you read that post, you would've noticed that borg has a lot of features. With a lot of features comes a lot of automation.

If you were thinking about using borg, you should either make a simple cron or you're gonna have to write an elaborate script to take care of all the different steps.

What if I told you there's another way ? An easier way ! The Borgmatic way… What would you say ?

Borgmatic

Borgmatic is defined on their website as follows.

borgmatic is simple, configuration-driven backup software for servers and workstations. Protect your files with client-side encryption. Backup your databases too. Monitor it all with integrated third-party services.

When you get down to it, borgmatic uses borg's API to automate a list of configurable tasks. This way, it saves you the trouble of writing your own scripts to automate these steps.

Borgmatic uses a YAML configuration file. Let's configure a few tasks.

Location

First, let's start by configuring the locations that borg is going to be working with.

location:
    source_directories:
        - /home/

    repositories:
        - user@backupserver:sourcehostname.borg

    one_file_system: true

    exclude_patterns:
        - /home/*/.cache
        - '*.pyc'

This tells borg that we need to backup our /home directories excluding a few patterns.

Let's not forget that we told borg where the repository is located at.

Storage

We need to configure the storage next.

storage:
    # Recommended
    # encryption_passcommand: secret-tool lookup borg-repository repo-name

    encryption_passphrase: "ReallyStrongPassphrase"
    compression: zstd,15
    ssh_command: ssh -i /path/to/private/key
    borg_security_directory: /path/to/base/config/security
    archive_name_format: 'borgmatic-{hostname}-{now}'

In this section, we tell borg a little bit of information about our repository. What are the credentials, where it can find them, etc.

The easy way is to go with a passphrase, but I recommend using an encryption_passcommand instead.

I also use zstd for compression instead of lz4, you better do your research before you change the default.

I also recommend, just as they do, the use of a security directory.
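The point of a passcommand is that the passphrase never sits in the configuration file; a command is run and whatever it prints is used instead. Here is a minimal sketch of that idea in Python. The function name is hypothetical and the demo command is a stand-in that simply prints a fixed string; this is not how borgmatic implements it.

```python
import shlex
import subprocess
import sys


def passphrase_from_command(command: str) -> str:
    """Run `command` and return its standard output without the trailing newline."""
    result = subprocess.run(
        shlex.split(command),
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.rstrip("\n")


# Stand-in command for illustration only; in the configuration above this would
# be something like the commented-out secret-tool lookup.
demo_command = f"{shlex.quote(sys.executable)} -c \"print('ReallyStrongPassphrase')\""
passphrase = passphrase_from_command(demo_command)
print(len(passphrase))
```

With this approach the secret lives in your keyring or password manager, and the YAML file can be shared or committed without leaking it.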

Retention

We can configure a retention for our backups, if we like.

retention:
    keep_hourly: 7
    keep_daily: 7
    keep_weekly: 4
    keep_monthly: 6
    keep_yearly: 2

    prefix: "borgmatic-"

The part about what to keep, from hourly to yearly, is self-explanatory.

I would like to point out the prefix part as it is important.

This is the prefix that borgmatic uses to consider backups for pruning.

warning

Watch out for the retention prefix
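Why the prefix matters is easier to see with a toy example. The sketch below is not borg's pruning algorithm; it only implements a "keep the newest archive of the last N days" rule, and the manual-home archive name is made up. What it does show is that only archives matching the prefix are ever considered for deletion.

```python
from datetime import datetime


def timestamp_of(name: str) -> datetime:
    """Parse the trailing timestamp of names like 'borgmatic-home-2020-02-02T18:01:36'."""
    return datetime.strptime(name[-19:], "%Y-%m-%dT%H:%M:%S")


def prune_candidates(archives: list, prefix: str, keep_daily: int) -> list:
    """Return the archive names a daily-only retention rule would delete."""
    matching = sorted(
        (name for name in archives if name.startswith(prefix)),
        key=timestamp_of,
        reverse=True,  # newest first
    )
    kept_days = {}
    for name in matching:
        day = timestamp_of(name).date()
        if day not in kept_days and len(kept_days) < keep_daily:
            kept_days[day] = name  # newest archive of that day
    keep = set(kept_days.values())
    return [name for name in matching if name not in keep]


archives = [
    "borgmatic-home-2020-01-31T22:02:12",
    "borgmatic-home-2020-02-01T22:01:34",
    "borgmatic-home-2020-02-02T16:01:22",
    "borgmatic-home-2020-02-02T18:01:36",
    "manual-home-2019-12-25T10:00:00",  # hypothetical archive created by hand
]
doomed = prune_candidates(archives, prefix="borgmatic-", keep_daily=2)
print(doomed)
```

The hand-made archive never shows up in the list because it does not match the prefix. Get the prefix wrong and you either prune nothing or prune archives you meant to keep.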

Consistency

After the updates, we'd like to check our backups.

consistency:
    checks:
        - repository
        - archives

    check_last: 3

    prefix: "borgmatic-"

warning

Watch out, again, for the consistency prefix

Hooks

Finally, hooks.

I'm going to talk about hooks a bit. Hooks can be used to backup MySQL, PostgreSQL or MariaDB.

They can also be hooks for on_error, before_backup, after_backup, before_everything and after_everything.

You can also hook to third party services which you can check on their webpage.

I deployed my own, so I configured my own.

Borgmatic Configuration

Let's put everything together now.

location:
    source_directories:
        - /home/

    repositories:
        - user@backupserver:sourcehostname.borg

    one_file_system: true

    exclude_patterns:
        - /home/*/.cache
        - '*.pyc'

storage:
    # Recommended
    # encryption_passcommand: secret-tool lookup borg-repository repo-name

    encryption_passphrase: "ReallyStrongPassphrase"
    compression: zstd,15
    ssh_command: ssh -i /path/to/private/key
    borg_security_directory: /path/to/base/config/security
    archive_name_format: 'borgmatic-{hostname}-{now}'

retention:
    keep_hourly: 7
    keep_daily: 7
    keep_weekly: 4
    keep_monthly: 6
    keep_yearly: 2

    prefix: "borgmatic-"

consistency:
    checks:
        - repository
        - archives

    check_last: 3

    prefix: "borgmatic-"

Now that we have everything together, let's save it in /etc/borgmatic.d/home.yaml.

Usage

If you have borg and borgmatic already installed on your system and the borgmatic configuration file in place, you can test it out.

You can create the repository.

# borgmatic init -v 2

You can list the backups for the repository.

# borgmatic list --last 5
borgmatic-home-2020-01-30T22:01:30 Thu, 2020-01-30 22:01:42 [0000000000000000000000000000000000000000000000000000000000000000]
borgmatic-home-2020-01-31T22:02:12 Fri, 2020-01-31 22:02:24 [0000000000000000000000000000000000000000000000000000000000000000]
borgmatic-home-2020-02-01T22:01:34 Sat, 2020-02-01 22:01:45 [0000000000000000000000000000000000000000000000000000000000000000]
borgmatic-home-2020-02-02T16:01:22 Sun, 2020-02-02 16:01:32 [0000000000000000000000000000000000000000000000000000000000000000]
borgmatic-home-2020-02-02T18:01:36 Sun, 2020-02-02 18:01:47 [0000000000000000000000000000000000000000000000000000000000000000]

You could run a check.

# borgmatic check -v 1
/etc/borgmatic.d/home.yaml: Pinging Healthchecks start
/borg/home: Running consistency checks
Remote: Starting repository check
Remote: Starting repository index check
Remote: Completed repository check, no problems found.
Starting archive consistency check...
Analyzing archive borgmatic-home-2020-02-01T22:01:34 (1/3)
Analyzing archive borgmatic-home-2020-02-02T16:01:22 (2/3)
Analyzing archive borgmatic-home-2020-02-02T18:01:36 (3/3)
Orphaned objects check skipped (needs all archives checked).
Archive consistency check complete, no problems found.

summary:
/etc/borgmatic.d/home.yaml: Successfully ran configuration file

But most of all, if you simply run borgmatic without any parameters, it will run through the whole configuration and apply all the steps.

At this point, you can simply add the borgmatic command in a cron to run on an interval.

The other option would be to configure a systemd timer and service to run this on an interval.

The latter is usually provided to you if you used your package manager to install borgmatic.

Conclusion

If you've checked borg and found it too much work to script, give borgmatic a try. I've been using borgmatic for a few weeks now with no issues at all. I recently hooked it to a monitoring system so I will have a better view of when it runs and how much time each run takes. Also, if any of my backups fail, I get notified by email. I hope you enjoy borg and borgmatic as much as I do.

DONE Dotfiles with Chezmoi dotfiles chezmoi encryption templates

A few months ago, I went on a search for a solution for my dotfiles.

I tried projects like GNU Stow, dotbot and a bare git repository. Each one of these solutions has its advantages and its disadvantages, but I found mine in Chezmoi.

Chezmoi ? That's French right ? How is learning French going to help me ?

Introduction

On a *nix system, whether Linux, BSD or even Mac OS now, the applications one uses have their configuration saved in the user's home directory. These files are called configuration files. Usually, these configuration files start with a . which on these systems designates hidden files (they do not show up with a simple ls). Due to their names, these configuration files are also referred to as dotfiles.

Note

I will be using dotfiles and configuration files interchangeably in this article, and they can be thought of as such.

One example of such files is the .bashrc file found in the user's home directory. It allows the user to configure bash and change some behaviours.

Now that we understand what dotfiles are, let's talk a little bit about the previously mentioned solutions. They deserve mentioning, especially if you're looking for such a solution.

GNU Stow

GNU Stow leverages the power of symlinks to keep your configuration in a centralized location. Wherever your repository lives, GNU Stow will mimic the internal structure of said repository in your home directory by smartly symlinking everything.

I said smartly because it tries to minimize the amount of symlinks created by symlinking to common root directories if possible.

By having all your configuration files under one directory structure, it is easier to push it to any public repository and share it with others.

The downside is, you end up with a lot of symlinks. It is also worth mentioning that not all applications behave well when their configuration directories are symlinked. Otherwise, GNU Stow is a great project.
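For the curious, the symlinking idea can be sketched in Python. This is a deliberately naive version that creates one symlink per file, which is exactly the "lot of symlinks" case; GNU Stow itself is smarter and links whole directories when it can. The function name and the sample file are made up, and the example runs in throwaway directories.

```python
import os
import tempfile


def link_tree(repo: str, home: str) -> list:
    """Symlink every file under `repo` to the same relative path under `home`."""
    created = []
    for root, _dirs, files in os.walk(repo):
        relative = os.path.relpath(root, repo)
        target_dir = os.path.normpath(os.path.join(home, relative))
        os.makedirs(target_dir, exist_ok=True)
        for name in files:
            link = os.path.join(target_dir, name)
            os.symlink(os.path.join(root, name), link)
            created.append(link)
    return created


# Throwaway directories so the example does not touch a real home directory.
with tempfile.TemporaryDirectory() as repo, tempfile.TemporaryDirectory() as home:
    os.makedirs(os.path.join(repo, ".config", "app"))
    with open(os.path.join(repo, ".config", "app", "settings.ini"), "w") as handle:
        handle.write("[main]\n")

    links = link_tree(repo, home)
    link_is_symlink = os.path.islink(links[0])
    with open(links[0]) as handle:
        link_content = handle.read()

print(len(links), link_is_symlink)
```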

Dotbot

Dotbot is a Python project that aims at automating your dotfiles. It gives you great control over what and how to manage your dotfiles.

Having it written in Python means it is very easy to install; pip. It also means that it should be easy to migrate it to different systems.

Dotbot has a lot going for it. If the idea of having control over every aspect of your dotfiles, including the possibility of setting up the environment along with them, appeals to you, then dotbot is for you.

Well, it's not for me.

Bare Git Repository

This is arguably the most elegant solution of them all.

The nice thing about this solution is its simplicity and cleanliness. It is essentially creating a bare git repository somewhere in your home directory specifying the home directory itself to be the working directory.

If you are wondering where one would use a bare git repository in real life other than this use case, look no further than any git server. On the server, Gitea for example, your repository is only a bare repository. One has to clone it to get the working directory along with it.

Anyway, back to our topic. This is a great solution if you don't have to worry about things you would like to hide.

By hide, I mean things like credentials, keys or passwords which never belong in a repository. You will need to find solutions for these types of files. I was looking for something less involving and more involved.

Chezmoi to the rescue ?

Isn't that what they all say ?

I like how the creator(s) defines Chezmoi

Manage your dotfiles across multiple machines, securely.

Pretty basic, straight to the point. Unfortunately, it's a little bit harder to grasp the concept of how it works.

Chezmoi basically generates the dotfiles from the local repository. These dotfiles are saved in different forms in the repository but they always generate the same output; the dotfiles. Think of Chezmoi as a dotfiles templating engine, at its basic form it saves your dotfiles as is and deploys them in any machine.
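To make "saved in different forms" a bit more concrete: as far as I understand Chezmoi's naming convention, files in the source state carry prefixes and suffixes such as dot_, private_, executable_ and .tmpl, and the real file name is derived from them. The sketch below handles only that small subset and is my own approximation, so check the Chezmoi reference for the full rules.

```python
def target_name(source_name: str) -> str:
    """Translate a source-state file name into the dotfile name it generates."""
    name = source_name
    # Attribute prefixes carry metadata (permissions and so on), not part of the name.
    for attribute in ("private_", "executable_"):
        if name.startswith(attribute):
            name = name[len(attribute):]
    if name.startswith("dot_"):
        name = "." + name[len("dot_"):]
    if name.endswith(".tmpl"):
        name = name[: -len(".tmpl")]
    return name


for source in ("dot_bashrc", "private_dot_netrc", "dot_gitconfig.tmpl"):
    print(source, "->", target_name(source))
```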

Working with Chezmoi

I think we should take a quick look at Chezmoi to see how it works.

Chezmoi is written in Golang, making it fairly easy to install, so I will forgo that boring part.

First run

To start using Chezmoi, one has to initialize a new Chezmoi repository.

chezmoi init

This will create a new git repository in ~/.local/share/chezmoi. This is now the source state, where Chezmoi will get your dotfiles.

Plain dotfiles management with Chezmoi

Now that we have a Chezmoi repository, we can start to populate it with dotfiles.

Let's assume that we would like to start managing one of our dotfiles with Chezmoi. I'm going with an imaginary application's configuration directory. This directory will hold different files with versatile content types. This is going to showcase some of Chezmoi's capabilities.

Note

This is how I use Chezmoi. If you have a better way to do things, I'd like to hear about it!

This DS9 application has its directory configuration in ~/.ds9/ where we find the config.

The configuration looks like any generic ini configuration.

[character/sisko]
Name = Benjamin
Rank = Captain
Credentials = sisko-creds.cred
Mastodon = sisko-api.mastodon

Nothing special about this file, let's add it to Chezmoi

chezmoi add ~/.ds9/config

And nothing happened… Hmm…

chezmoi managed
/home/user/.ds9
/home/user/.ds9/config

Okay, it seems that it is being managed.

We can test it out by doing something like this.

mv ~/.ds9/config ~/.ds9/config.old

chezmoi diff
install -m 644 /dev/null /home/user/.ds9/config
--- a/home/user/.ds9/config
+++ b/home/user/.ds9/config
@@ -0,0 +1,5 @@
+[character/sisko]
+Name = Benjamin
+Rank = Captain
+Credentials = sisko-creds.cred
+Mastodon = sisko-api.mastodon

Alright, everything looks as it should be.

But that's only a diff. How do I make Chezmoi apply the changes ? My dotfile is still config.old.

Okay, we can actually get rid of the config.old file and make Chezmoi regenerate the configuration.

rm ~/.ds9/config ~/.ds9/config.old

chezmoi -v apply

Note

I like to use the -v flag to check what is actually being applied.

install -m 644 /dev/null /home/user/.ds9/config
--- a/home/user/.ds9/config
+++ b/home/user/.ds9/config
@@ -0,0 +1,5 @@
+[character/sisko]
+Name = Benjamin
+Rank = Captain
+Credentials = sisko-creds.cred
+Mastodon = sisko-api.mastodon

And we get the same output as the diff. Nice!

The configuration file was also recreated, that's awesome.

If you've followed so far, you might have wondered… If I edit ~/.ds9/config, then Chezmoi is going to override it!

YES, yes it will.

warning

Always use Chezmoi to edit your managed dotfiles. Do NOT edit them directly.

ALWAYS use chezmoi diff before every apply.

To edit your managed dotfile, simply tell Chezmoi about it.

chezmoi edit ~/.ds9/config

Chezmoi will use your $EDITOR to open the file for you to edit. Once saved, it's saved in the repository database.

Be aware, at this point the changes are not reflected in your home directory, only in the Chezmoi source state. Make sure you diff and then apply to make the changes in your home.
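The "diff first, apply second" habit boils down to comparing what is currently in your home directory with what the source state would generate. Here is a minimal sketch of that comparison using Python's difflib. It is not Chezmoi's code, and the Commander rank is a made-up value standing in for a stale file.

```python
import difflib


def preview(current: str, desired: str, path: str) -> str:
    """Return a unified diff of what applying `desired` would change at `path`."""
    diff = difflib.unified_diff(
        current.splitlines(keepends=True),
        desired.splitlines(keepends=True),
        fromfile=f"a{path}",
        tofile=f"b{path}",
    )
    return "".join(diff)


# What is in the home directory right now (made-up stale value).
current = "[character/sisko]\nName = Benjamin\nRank = Commander\n"
# What the source state says the file should contain.
desired = "[character/sisko]\nName = Benjamin\nRank = Captain\n"

changes = preview(current, desired, "/home/user/.ds9/config")
print(changes)
```

An empty diff means applying would change nothing, which is the state you want to be in before you walk away from the machine.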

Chezmoi repository management

As mentioned previously, the repository is found in ~/.local/share/chezmoi.

I always forget where it is, luckily Chezmoi has a solution for that.

chezmoi cd

Now, we are in the repository. We can work with it as a regular git repository.

When you're done, don't forget to exit.

Other features

It is worth mentioning at this point that Chezmoi offers a few more integrations.

Due to the fact that Chezmoi is written in Golang, it can leverage the power of the Golang templating system.

One can replace repeatable values like email or name with a template like {{ .email }} or {{ .name }}.

This will result in a replacement of these templated variables with their real values in the resulting dotfile. This is another reason why you should always edit your managed dotfiles through Chezmoi.

Our previous example would look a bit different.

[character/sisko]
Name = {{ .sisko.name }}
Rank = {{ .sisko.rank }}
Credentials = sisko-creds.cred
Mastodon = sisko-api.mastodon

And we would add it a bit differently now.

chezmoi add --template ~/.ds9/config

warning

Follow the documentation to configure the values.
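If templating is new to you, this is roughly what happens to the placeholders when the dotfile is generated. The sketch is a tiny stand-in written in Python with a regular expression; it only understands plain {{ .dotted.path }} lookups and is in no way a replacement for Golang's templating system. The data dictionary stands in for the values you would configure.

```python
import re


def render(template: str, data: dict) -> str:
    """Replace every {{ .dotted.path }} placeholder with the matching value in `data`."""
    def lookup(match):
        value = data
        for key in match.group(1).split("."):
            value = value[key]  # raises KeyError when a value is not configured
        return str(value)

    return re.sub(r"\{\{\s*\.([\w.]+)\s*\}\}", lookup, template)


template = "Name = {{ .sisko.name }}\nRank = {{ .sisko.rank }}\n"
data = {"sisko": {"name": "Benjamin", "rank": "Captain"}}  # hypothetical values
rendered = render(template, data)
print(rendered)
```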

Once you have the power of templating on your side, you can always take it one step further. Chezmoi has integration with a big list of password managers. These can be used directly in the configuration files.

In our hypothetical example, we can think of the credentials file (~/.ds9/sisko-creds.cred).

Name = {{ (keepassxc "sisko.ds9").Name }}
Rank = {{ (keepassxc "sisko.ds9").Rank }}
Access_Code = {{ (keepassxc "sisko.ds9").AccessCode }}

Do not forget that this is also using the templating engine. So you need to add as a template.

chezmoi add --template ~/.ds9/sisko-creds.cred

Wait, what ! You almost slipped away right there old fellow.

We have our Mastodon API key in the sisko-api.mastodon file. The whole file cannot be pushed to a repository.

It turns out that Chezmoi can use gpg to encrypt your files making it possible for you to push them.

To add a file encrypted to the Chezmoi repository, use the following command.

chezmoi add --encrypt ~/.ds9/sisko-api.mastodon

There is a list of other features that Chezmoi supports that I did not mention. I did not use all the features offered yet. You should check the website for the full documentation.

Conclusion

I have fully migrated to Chezmoi. I have used all the features above, and it has worked flawlessly so far.

I like the idea that it offers all the features I need while at the same time staying out of the way. I find myself, often, editing the dotfiles in my home directory as a dev version. Once I get to a configuration I like, I add it to Chezmoi. If I ever mess up badly, I ask Chezmoi to override my changes.

I understand it adds a little bit of overhead with the use of chezmoi commands, which I aliased to cm. But the end result is a home directory which seems untouched by any tools (no symlinks, no copies, etc…) making it easier to migrate out of Chezmoi as a solution and into another one if I ever choose in the future.

Configuration Management @configuration_management

DONE Ansible testing with Molecule ansible molecule

When I first started using ansible, I did not know about molecule. It was a bit daunting to start a role from scratch and trying to develop it without having the ability to test it. Then a co-worker of mine told me about molecule and everything changed.

I do not have any of the tools I need installed on this machine, so I will go through, step by step, how I set up ansible and molecule on any new machine I come across for writing ansible roles.

Requirements

What we are trying to achieve in this post, is a working ansible role that can be tested inside a docker container. To be able to achieve that, we need to install docker on the system. Follow the instructions on installing docker found on the docker website.

Good Practices

First things first. Let's start by making sure that we have python installed properly on the system.

$ python --version
Python 3.7.1

Because in this case I have python3 installed, I can create a virtualenv more easily, without the use of external tools.

# Create the directory to work with
$ mkdir -p sandbox/test-roles

# Navigate to the directory
$ cd sandbox/test-roles/

# Create the virtualenv
~/sandbox/test-roles $ python -m venv .ansible-venv

# Activate the virtualenv
~/sandbox/test-roles $ source .ansible-venv/bin/activate

# Check that your virtualenv activated properly
(.ansible-venv) ~/sandbox/test-roles $ which python
/home/elijah/sandbox/test-roles/.ansible-venv/bin/python
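The "no external tools" part is thanks to the venv module that ships with python3. If you ever want to script the same step, a minimal sketch could look like the following. It works in a throwaway directory and skips installing pip to stay fast and offline, which is an assumption of this example and not what the commands above do.

```python
import os
import tempfile
import venv


def create_env(base_dir: str, name: str = ".ansible-venv") -> str:
    """Create a virtual environment under `base_dir` and return its path."""
    env_dir = os.path.join(base_dir, name)
    # with_pip=False keeps the sketch offline; `python -m venv` installs pip by default.
    venv.EnvBuilder(with_pip=False).create(env_dir)
    return env_dir


with tempfile.TemporaryDirectory() as sandbox:
    env_dir = create_env(sandbox)
    has_config = os.path.isfile(os.path.join(env_dir, "pyvenv.cfg"))

print(has_config)
```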

At this point, we can install the required dependencies.

$ pip install ansible molecule docker

Collecting ansible

Downloading https://files.pythonhosted.org/packages/56/fb/b661ae256c5e4a5c42859860f59f9a1a0b82fbc481306b30e3c5159d519d/ansible-2.7.5.tar.gz (11.8MB)

100% |████████████████████████████████| 11.8MB 3.8MB/s

Collecting molecule

Downloading https://files.pythonhosted.org/packages/84/97/e5764079cb7942d0fa68b832cb9948274abb42b72d9b7fe4a214e7943786/molecule-2.19.0-py3-none-any.whl (180kB)

100% |████████████████████████████████| 184kB 2.2MB/s

...

Successfully built ansible ansible-lint anyconfig cerberus psutil click-completion tabulate tree-format pathspec future pycparser arrow

Installing collected packages: MarkupSafe, jinja2, PyYAML, six, pycparser, cffi, pynacl, idna, asn1crypto, cryptography, bcrypt, paramiko, ansible, pbr, git-url-parse, monotonic, fasteners, click, colorama, sh, python-gilt, ansible-lint, pathspec, yamllint, anyconfig, cerberus, psutil, more-itertools, py, attrs, pluggy, atomicwrites, pytest, testinfra, ptyprocess, pexpect, click-completion, tabulate, future, chardet, binaryornot, poyo, urllib3, certifi, requests, python-dateutil, arrow, jinja2-time, whichcraft, cookiecutter, tree-format, molecule, docker-pycreds, websocket-client, docker

Successfully installed MarkupSafe-1.1.0 PyYAML-3.13 ansible-2.7.5 ansible-lint-3.4.23 anyconfig-0.9.7 arrow-0.13.0 asn1crypto-0.24.0 atomicwrites-1.2.1 attrs-18.2.0 bcrypt-3.1.5 binaryornot-0.4.4 cerberus-1.2 certifi-2018.11.29 cffi-1.11.5 chardet-3.0.4 click-6.7 click-completion-0.3.1 colorama-0.3.9 cookiecutter-1.6.0 cryptography-2.4.2 docker-3.7.0 docker-pycreds-0.4.0 fasteners-0.14.1 future-0.17.1 git-url-parse-1.1.0 idna-2.8 jinja2-2.10 jinja2-time-0.2.0 molecule-2.19.0 monotonic-1.5 more-itertools-5.0.0 paramiko-2.4.2 pathspec-0.5.9 pbr-4.1.0 pexpect-4.6.0 pluggy-0.8.1 poyo-0.4.2 psutil-5.4.6 ptyprocess-0.6.0 py-1.7.0 pycparser-2.19 pynacl-1.3.0 pytest-4.1.0 python-dateutil-2.7.5 python-gilt-1.2.1 requests-2.21.0 sh-1.12.14 six-1.11.0 tabulate-0.8.2 testinfra-1.16.0 tree-format-0.1.2 urllib3-1.24.1 websocket-client-0.54.0 whichcraft-0.5.2 yamllint-1.11.1

Creating your first ansible role

Once all the steps above are complete, we can start by creating our first ansible role.

$ molecule init role -r example-role

--> Initializing new role example-role...

Initialized role in /home/elijah/sandbox/test-roles/example-role successfully.

$ tree example-role/
example-role/
├── defaults
│   └── main.yml
├── handlers
│   └── main.yml
├── meta
│   └── main.yml
├── molecule
│   └── default
│       ├── Dockerfile.j2
│       ├── INSTALL.rst
│       ├── molecule.yml
│       ├── playbook.yml
│       └── tests
│           ├── __pycache__
│           │   └── test_default.cpython-37.pyc
│           └── test_default.py
├── README.md
├── tasks
│   └── main.yml
└── vars
    └── main.yml

9 directories, 12 files

You can find what each directory is for and how ansible works by visiting docs.ansible.com.

meta/main.yml

The meta file needs to be modified and filled with information about the role. This is not a required file to modify if you are keeping this for yourself, for example. But it is a good idea to have as much information as possible if this is going to be released. In my case, I don't need any fanciness as this is just sample code.

---
galaxy_info:
  author: Elia el Lazkani
  description: This is an example ansible role to showcase molecule at work
  license: license (BSD-2)
  min_ansible_version: 2.7
  galaxy_tags: []

dependencies: []

tasks/main.yml

This is where the magic is set in motion. Tasks are the smallest entities in a role that do small and idempotent actions. Let's write a few simple tasks to create a user and install a service.

---
# Create the user example
- name: Create 'example' user
  user:
    name: example
    comment: Example user
    shell: /bin/bash
    state: present
    create_home: yes
    home: /home/example

# Install nginx
- name: Install nginx
  apt:
    name: nginx
    state: present
    update_cache: yes
  notify: Restart nginx
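Since idempotency is the key property here, a tiny sketch may help. It models "make sure this user exists" against an in-memory dictionary, which is obviously not how Ansible's user module works; the function name and the dictionary are stand-ins. The point is only that running the same action twice changes something the first time and nothing the second.

```python
def ensure_user(users: dict, name: str, shell: str, home: str) -> bool:
    """Make sure `name` exists with the given attributes; return True if anything changed."""
    desired = {"shell": shell, "home": home}
    if users.get(name) == desired:
        return False  # already in the desired state, nothing to do
    users[name] = desired
    return True


users = {}  # stand-in for the system's user database
first_run = ensure_user(users, "example", "/bin/bash", "/home/example")
second_run = ensure_user(users, "example", "/bin/bash", "/home/example")
print(first_run, second_run)
```

This is the same changed/ok distinction you will see in the output when the role runs.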

handlers/main.yml

If you noticed, we are notifying a handler to be called after installing nginx. All handlers notified will run after all the tasks complete and each handler will only run once. This is a good way to make sure that you don't restart nginx multiple times if you call the handler more than once.

---
# Handler to restart nginx
- name: Restart nginx
  service:
    name: nginx
    state: restarted
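The "notified many times, run once" behaviour can be pictured with a short simulation. This is a simplified model and not Ansible's implementation: tasks are plain dictionaries, the Configure nginx task is hypothetical, and handlers simply run at the end of the play.

```python
def run_play(tasks: list) -> list:
    """Run tasks in order, then run each notified handler once; return the event log."""
    log = []
    notified = []  # handlers waiting to run, duplicates are dropped
    for task in tasks:
        log.append(f"TASK {task['name']}")
        handler = task.get("notify")
        # Only tasks that changed something notify their handler.
        if task["changed"] and handler and handler not in notified:
            notified.append(handler)
    for handler in notified:
        log.append(f"HANDLER {handler}")
    return log


tasks = [
    {"name": "Install nginx", "changed": True, "notify": "Restart nginx"},
    {"name": "Configure nginx", "changed": True, "notify": "Restart nginx"},
    {"name": "Create 'example' user", "changed": False},
]
log = run_play(tasks)
print("\n".join(log))
```

Two tasks asked for a restart, yet nginx is restarted only once, after all the tasks are done.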

molecule/default/molecule.yml

It's time to configure molecule to do what we need. We need to start an ubuntu docker container, so we need to specify that in the molecule YAML file. All we need to do is change the image line to specify that we want an ubuntu:bionic image.

---
dependency:
  name: galaxy
driver:
  name: docker
lint:
  name: yamllint
platforms:
  - name: instance
    image: ubuntu:bionic
provisioner:
  name: ansible
  lint:
    name: ansible-lint
scenario:
  name: default
verifier:
  name: testinfra
  lint:
    name: flake8

molecule/default/playbook.yml

This is the playbook that molecule will run. Make sure that you have all the steps that you need here. I will keep this as is.

---
- name: Converge
  hosts: all
  roles:
    - role: example-role

First Role Pass

It is time to test our role and see what's going on.

(.ansible-venv) ~/sandbox/test-roles/example-role/ $ molecule converge

--> Validating schema /home/elijah/sandbox/test-roles/example-role/molecule/default/molecule.yml.

Validation completed successfully.

--> Test matrix

└── default
    ├── dependency
    ├── create
    ├── prepare
    └── converge

--> Scenario: 'default'

--> Action: 'dependency'

Skipping, missing the requirements file.

--> Scenario: 'default'

--> Action: 'create'

PLAY [Create] ******************************************************************

TASK [Log into a Docker registry] **********************************************

skipping: [localhost] => (item=None)

TASK [Create Dockerfiles from image names] *************************************

changed: [localhost] => (item=None)

changed: [localhost]

TASK [Discover local Docker images] ********************************************

ok: [localhost] => (item=None)

ok: [localhost]

TASK [Build an Ansible compatible image] ***************************************

changed: [localhost] => (item=None)

changed: [localhost]

TASK [Create docker network(s)] ************************************************

TASK [Create molecule instance(s)] *********************************************

changed: [localhost] => (item=None)

changed: [localhost]

TASK [Wait for instance(s) creation to complete] *******************************

changed: [localhost] => (item=None)

changed: [localhost]

PLAY RECAP *********************************************************************

localhost : ok=5 changed=4 unreachable=0 failed=0

--> Scenario: 'default'

--> Action: 'prepare'

Skipping, prepare playbook not configured.

--> Scenario: 'default'

--> Action: 'converge'

PLAY [Converge] ****************************************************************

TASK [Gathering Facts] *********************************************************

ok: [instance]

TASK [example-role : Create 'example' user] ************************************

changed: [instance]

TASK [example-role : Install nginx] ********************************************

changed: [instance]

RUNNING HANDLER [example-role : Restart nginx] *********************************

changed: [instance]

PLAY RECAP *********************************************************************

instance : ok=4 changed=3 unreachable=0 failed=0

It looks like the converge step succeeded.

Writing Tests

It is always a good practice to write unit tests when you're writing code. Ansible roles should not be an exception. Molecule offers a way to run tests, which you can think of as unit tests, to make sure that what the role gives you is what you were expecting. This helps future development of the role and keeps you from falling into previously solved traps.

molecule/default/tests/test_default.py

Molecule leverages the testinfra project to run its tests. You can use other tools if you so wish, and there are many. In this example we will be using testinfra.

import os

import testinfra.utils.ansible_runner

testinfra_hosts = testinfra.utils.ansible_runner.AnsibleRunner(

    os.environ['MOLECULE_INVENTORY_FILE']).get_hosts('all')

def test_hosts_file(host):

    f = host.file('/etc/hosts')

    assert f.exists

    assert f.user == 'root'

    assert f.group == 'root'

def test_user_created(host):

    user = host.user("example")

    assert user.name == "example"

    assert user.home == "/home/example"

def test_user_home_exists(host):

    user_home = host.file("/home/example")

    assert user_home.exists

    assert user_home.is_directory

def test_nginx_is_installed(host):

    nginx = host.package("nginx")

    assert nginx.is_installed

def test_nginx_running_and_enabled(host):

    nginx = host.service("nginx")

    assert nginx.is_running

Warning

Uncomment truthy: disable in .yamllint found at the base of the role.

(.ansible_venv) ~/sandbox/test-roles/example-role $ molecule test

--> Validating schema /home/elijah/sandbox/test-roles/example-role/molecule/default/molecule.yml.

Validation completed successfully.

--> Test matrix

└── default

├── lint

├── destroy

├── dependency

├── syntax

├── create

├── prepare

├── converge

├── idempotence

├── side_effect

├── verify

└── destroy

--> Scenario: 'default'

--> Action: 'lint'

--> Executing Yamllint on files found in /home/elijah/sandbox/test-roles/example-role/...

Lint completed successfully.

--> Executing Flake8 on files found in /home/elijah/sandbox/test-roles/example-role/molecule/default/tests/...

/home/elijah/.virtualenvs/world/lib/python3.7/site-packages/pycodestyle.py:113: FutureWarning: Possible nested set at position 1

EXTRANEOUS_WHITESPACE_REGEX = re.compile(r'[[({] | []}),;:]')

Lint completed successfully.

--> Executing Ansible Lint on /home/elijah/sandbox/test-roles/example-role/molecule/default/playbook.yml...

Lint completed successfully.

--> Scenario: 'default'

--> Action: 'destroy'

PLAY [Destroy] *****************************************************************

TASK [Destroy molecule instance(s)] ********************************************

changed: [localhost] => (item=None)

changed: [localhost]

TASK [Wait for instance(s) deletion to complete] *******************************

ok: [localhost] => (item=None)

ok: [localhost]

TASK [Delete docker network(s)] ************************************************

PLAY RECAP *********************************************************************

localhost : ok=2 changed=1 unreachable=0 failed=0

--> Scenario: 'default'

--> Action: 'dependency'

Skipping, missing the requirements file.

--> Scenario: 'default'

--> Action: 'syntax'

playbook: /home/elijah/sandbox/test-roles/example-role/molecule/default/playbook.yml

--> Scenario: 'default'

--> Action: 'create'

PLAY [Create] ******************************************************************

TASK [Log into a Docker registry] **********************************************

skipping: [localhost] => (item=None)

TASK [Create Dockerfiles from image names] *************************************

changed: [localhost] => (item=None)

changed: [localhost]

TASK [Discover local Docker images] ********************************************

ok: [localhost] => (item=None)

ok: [localhost]

TASK [Build an Ansible compatible image] ***************************************

changed: [localhost] => (item=None)

changed: [localhost]

TASK [Create docker network(s)] ************************************************

TASK [Create molecule instance(s)] *********************************************

changed: [localhost] => (item=None)

changed: [localhost]

TASK [Wait for instance(s) creation to complete] *******************************

changed: [localhost] => (item=None)

changed: [localhost]

PLAY RECAP *********************************************************************

localhost : ok=5 changed=4 unreachable=0 failed=0

--> Scenario: 'default'

--> Action: 'prepare'

Skipping, prepare playbook not configured.

--> Scenario: 'default'

--> Action: 'converge'

PLAY [Converge] ****************************************************************

TASK [Gathering Facts] *********************************************************

ok: [instance]

TASK [example-role : Create 'example' user] ************************************

changed: [instance]

TASK [example-role : Install nginx] ********************************************

changed: [instance]

RUNNING HANDLER [example-role : Restart nginx] *********************************

changed: [instance]

PLAY RECAP *********************************************************************

instance : ok=4 changed=3 unreachable=0 failed=0

--> Scenario: 'default'

--> Action: 'idempotence'

Idempotence completed successfully.

--> Scenario: 'default'

--> Action: 'side_effect'

Skipping, side effect playbook not configured.

--> Scenario: 'default'

--> Action: 'verify'

--> Executing Testinfra tests found in /home/elijah/sandbox/test-roles/example-role/molecule/default/tests/...

============================= test session starts ==============================

platform linux -- Python 3.7.1, pytest-4.1.0, py-1.7.0, pluggy-0.8.1

rootdir: /home/elijah/sandbox/test-roles/example-role/molecule/default, inifile:

plugins: testinfra-1.16.0

collected 5 items

tests/test_default.py ..... [100%]

=============================== warnings summary ===============================

...

==================== 5 passed, 7 warnings in 27.37 seconds =====================

Verifier completed successfully.

--> Scenario: 'default'

--> Action: 'destroy'

PLAY [Destroy] *****************************************************************

TASK [Destroy molecule instance(s)] ********************************************

changed: [localhost] => (item=None)

changed: [localhost]

TASK [Wait for instance(s) deletion to complete] *******************************

changed: [localhost] => (item=None)

changed: [localhost]

TASK [Delete docker network(s)] ************************************************

PLAY RECAP *********************************************************************

localhost : ok=2 changed=2 unreachable=0 failed=0

I have a few warning messages (that's likely because I am using Python 3.7 and some of the libraries still don't fully support the new standards released with it) but all my tests passed.

Conclusion

Molecule is a great tool to test Ansible roles quickly while developing them. It also comes bundled with a bunch of other features from different projects that will test all aspects of your Ansible code. I suggest you start using it when writing new Ansible roles.

Container @container

DONE Linux Containers linux kernel docker podman dockerfile

Our story dates all the way back to 2006, believe it or not. The first steps were taken towards what we know today as containers. We'll discuss their history, how to build them and how to use them. Stick around! You might enjoy the ride.

History

2006-2007 - The Generic Process Containers lands in Linux

This was renamed thereafter to Control Groups, popularly known as cgroups, and landed in Linux version 2.6.24.

Cgroups are the first piece of the puzzle in Linux Containers. We will be talking about cgroups in detail later.

2008 - Namespaces

Even though namespaces have been around since 2002, Linux version 2.4.19, they saw rapid development beginning in 2006 and into 2008.

Namespaces are the other piece of the puzzle in Linux Containers. We will talk about namespaces in more detail later.

2008 - LXC

LXC finally shows up!

LXC is the first form of containers on the Linux kernel. LXC combined both cgroups and namespaces to provide isolated environments; containers.

Note

It is worth mentioning that LXC runs full operating system containers from an image. In other words, LXC containers are meant to run more than one process.

2013 - Docker

Docker offered a full set of tools for working with containers, making it easier than ever to work with them.

Docker containers are designed to only run the application process.

Unlike LXC, the PID 1 of a Docker container is expected to be the application running in the container.

We will be discussing this topic in more detail later.

Concepts

cgroups

Let's find out ! Better yet, let's use the tools at our disposal to find out together…

Open a terminal and run the following command.

man 7 cgroups

This should open the man pages for cgroups.

Control groups, usually referred to as cgroups, are a Linux kernel feature which allow processes to be organized into hierarchical groups whose usage of various types of resources can then be limited and monitored. The kernel's cgroup interface is provided through a pseudo-filesystem called cgroupfs. Grouping is implemented in the core cgroup kernel code, while resource tracking and limits are implemented in a set of per-resource-type subsystems (memory, CPU, and so on).

This can all be simplified by explaining it in a different way.

Essentially, you can think of cgroups as a way for the kernel to limit what you can use.

This gives us the ability to give a container only 1 CPU out of the 4 available to the kernel. Or maybe, limit the memory allowed for the container to 512MB. This way the container cannot exhaust the resources of the system if it runs a fork bomb, for example.

But, cgroups do not limit what we can "see".
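If you are curious which cgroups a process belongs to, the kernel exposes that in `/proc/<pid>/cgroup`. Here is a minimal sketch in Python, assuming a Linux system, that parses that file. The line format (`hierarchy-ID:controller-list:cgroup-path`) comes from the same cgroups man page; the function names are my own.

```python
from pathlib import Path


def parse_cgroup_file(text):
    """Parse the contents of /proc/<pid>/cgroup into a list of dicts.

    Each line has the form: hierarchy-ID:controller-list:cgroup-path
    """
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split only twice: the cgroup path itself may contain colons.
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append({
            "hierarchy_id": int(hierarchy_id),
            # On cgroups v2 the controller list is empty.
            "controllers": controllers.split(",") if controllers else [],
            "path": path,
        })
    return entries


def current_cgroups():
    """Return the cgroup membership of the current process (Linux only)."""
    return parse_cgroup_file(Path("/proc/self/cgroup").read_text())
```

Calling `current_cgroups()` on the host and again inside a container should show different cgroup paths, which is the "limit what you can use" part at work.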

namespaces

As we did before, let's check the man page for namespaces

man 7 namespaces

A namespace wraps a global system resource in an abstraction that makes it appear to the processes within the namespace that they have their own isolated instance of the global resource. Changes to the global resource are visible to other processes that are members of the namespace, but are invisible to other processes. One use of namespaces is to implement containers.

Wooow ! That's more mumbo jumbo ?!

Let's simplify this one as well.

You can think of namespaces as a way for the kernel to limit what we see.

There are multiple namespaces, like the cgroup namespace, which virtualizes a process's view of its cgroups.

Another example is the PID namespace: inside it, a process can have PID 1 while having a different PID on the host system.

The namespaces manual page lists them; you can check it out for more details. But I hope you get the gist of it !
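To see namespaces for yourself, you can look at the symbolic links under `/proc/<pid>/ns`. A minimal sketch, assuming a Linux system: each link target looks like `pid:[4026531836]`, and, per the namespaces man page, two processes in the same namespace show the same inode number. The function names are my own.

```python
import os
import re

# A link target looks like "pid:[4026531836]".
NS_LINK = re.compile(r"^(?P<type>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target):
    """Split a /proc/<pid>/ns link target into (namespace type, inode)."""
    match = NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group("type"), int(match.group("inode"))


def list_namespaces(pid="self"):
    """Map each entry in /proc/<pid>/ns to its inode number (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        result[name] = inode
    return result
```

Comparing `list_namespaces()` from the host with the same call from inside a container should show different inode numbers for the namespaces the container does not share with the host.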

Linux Containers

We are finally here! Let's talk Linux Containers.

The first topic we need to know about is images.

We mentioned before that Docker came in and offered tooling around containers.

One of the concepts Docker images rely on is layers.

First of all, an image is a file-system representation of a container. It is an on-disk, read-only image. It sort of looks like your Linux filesystem.

Then, layers are stacked on top to add functionality. You might ask, what are these layers? We will see them in action.

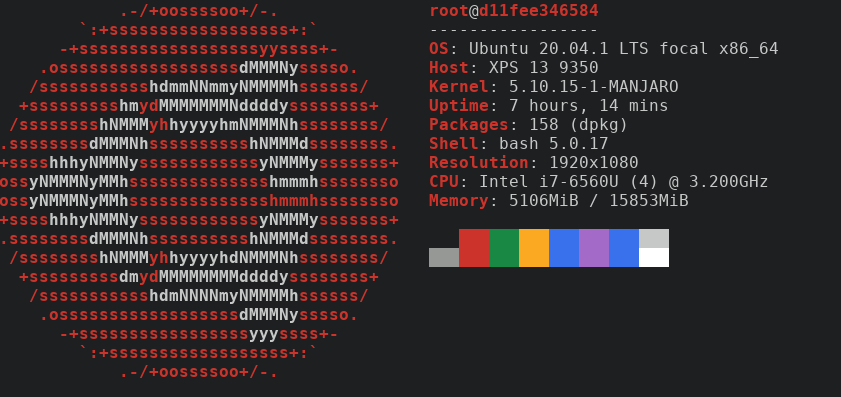

Let's look at my system.

lsb_release -a

LSB Version: n/a

Distributor ID: ManjaroLinux

Description: Manjaro Linux

Release: 20.2.1

Codename: Nibia

As you can see, I am running Manjaro. Keep that in mind.

Let's take a look at the kernel running on this machine.

uname -a

Linux manjaro 5.10.15-1-MANJARO #1 SMP PREEMPT Wed Feb 10 10:42:47 UTC 2021 x86_64 GNU/Linux

So, it's kernel version 5.10.15. Remember this one as well.

I would like to test a tool called neofetch. Why ?

- First reason, I am not that creative.

- Second, it's a nice tool, you'll see.

We can test neofetch

neofetch

fish: Unknown command: neofetch

Look at that! We don't have it installed… Not a big deal. We can download an image and test it inside.

Let's download a docker image. I am using podman, an open source project that allows us to use containers.

Note

You might want to run these commands with sudo privileges.

podman pull ubuntu:20.04

f63181f19b2fe819156dcb068b3b5bc036820bec7014c5f77277cfa341d4cb5e

Let's pull an Ubuntu image.

As you can see, we have pulled an image from the repositories online. We can see further information about the image.

podman images

REPOSITORY                TAG    IMAGE ID      CREATED      SIZE

docker.io/library/ubuntu  20.04  f63181f19b2f  5 weeks ago  75.3 MB

Much better, now we can see that we have an Ubuntu image downloaded from docker.io.

A container is nothing more than an instance of an image. It is the running instance of an image.

Let's list our containers.

podman ps -a

CONTAINER ID  IMAGE  COMMAND  CREATED  STATUS  PORTS  NAMES

We have none. Let's start one.

podman run -it ubuntu:20.04 uname -a

Linux 57453b419a43 5.10.15-1-MANJARO #1 SMP PREEMPT Wed Feb 10 10:42:47 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

It's running the same kernel as our machine… Are we really inside a container ?

podman run -it ubuntu:20.04 hostname -f

6795b85eeb50

Okay ?! And our hostname is ?

hostname -f

manjaro

Hmm… They have different hostnames…

Let's see if it's really Ubuntu.

podman run -it ubuntu:20.04 bash -c 'apt-get update && apt-get install -y vim'

Get:1 http://archive.ubuntu.com/ubuntu focal InRelease [265 kB]

Get:2 http://archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]

Get:3 http://archive.ubuntu.com/ubuntu focal-backports InRelease [101 kB]

Get:4 http://security.ubuntu.com/ubuntu focal-security InRelease [109 kB]

Get:5 http://archive.ubuntu.com/ubuntu focal/restricted amd64 Packages [33.4 kB]

Get:6 http://archive.ubuntu.com/ubuntu focal/multiverse amd64 Packages [177 kB]

Get:7 http://archive.ubuntu.com/ubuntu focal/universe amd64 Packages [11.3 MB]

...

Setting up libpython3.8:amd64 (3.8.5-1~20.04.2) ...

Setting up vim (2:8.1.2269-1ubuntu5) ...

update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/vim (vim) in auto mode

update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/vimdiff (vimdiff) in auto mode

update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/rvim (rvim) in auto mode

update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/rview (rview) in auto mode

update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/vi (vi) in auto mode

...

update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/editor (editor) in auto mode

...

Processing triggers for libc-bin (2.31-0ubuntu9.1) ...

This should not work on my Manjaro. apt-get is not a thing here.

Well, the output is a bit large so I truncated it a bit for readability but we seem to have installed vim successfully.

Now that we have seen what an image is and what a container is, we can explore a bit inside a container to see it more clearly.

So, what can we do with containers? We can use the layering system and the tooling Docker created to build them and distribute them.

Let's go back to our neofetch example.

I want to get an Ubuntu image, then install neofetch on it.

First step, create a Dockerfile in your current directory; the build command used further down expects it to be named Dockerfile.ubuntu. It should look like this.

FROM ubuntu:20.04

RUN apt-get update && \

    apt-get install -y neofetch

This file has two commands:

- FROM designates the base image to use. This is the base image we will be building upon. In our case, we chose ubuntu:20.04. You can find images on multiple platforms. To mention a few, we have Dockerhub, Quay.io and a few others. By default, this downloads from Dockerhub.

- RUN designates the commands to run. Pretty simple. We are running a couple of commands that should be very familiar to any user of Debian-based OS's.

Now that we have a Dockerfile, we can build the image.

podman build -t neofetch-ubuntu:20.04 -f Dockerfile.ubuntu .

STEP 1: FROM ubuntu:20.04

STEP 2: RUN apt-get update && apt-get install -y neofetch

Get:1 http://archive.ubuntu.com/ubuntu focal InRelease [265 kB]

Get:2 http://security.ubuntu.com/ubuntu focal-security InRelease [109 kB]

Get:3 http://archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]

...

Fetched 17.2 MB in 2s (7860 kB/s)

Reading package lists...

...

The following additional packages will be installed: chafa dbus fontconfig-config fonts-dejavu-core fonts-droid-fallback fonts-noto-mono fonts-urw-base35 ghostscript gsfonts imagemagick-6-common krb5-locales libapparmor1 libavahi-client3 libavahi-common-data libavahi-common3 libbsd0 libchafa0 libcups2 libdbus-1-3 libexpat1 libfftw3-double3 libfontconfig1 libfreetype6 libglib2.0-0 libglib2.0-data libgomp1 libgs9 libgs9-common libgssapi-krb5-2 libicu66 libidn11 libijs-0.35 libjbig0 libjbig2dec0 libjpeg-turbo8 libjpeg8 libk5crypto3 libkeyutils1 libkrb5-3 libkrb5support0 liblcms2-2 liblqr-1-0 libltdl7 libmagickcore-6.q16-6 libmagickwand-6.q16-6 libopenjp2-7 libpaper-utils libpaper1 libpng16-16 libssl1.1 libtiff5 libwebp6 libwebpmux3 libx11-6 libx11-data libxau6 libxcb1 libxdmcp6 libxext6 libxml2 poppler-data shared-mime-info tzdata ucf xdg-user-dirs

Suggested packages: default-dbus-session-bus | dbus-session-bus fonts-noto fonts-freefont-otf | fonts-freefont-ttf fonts-texgyre ghostscript-x cups-common libfftw3-bin libfftw3-dev krb5-doc krb5-user liblcms2-utils libmagickcore-6.q16-6-extra poppler-utils fonts-japanese-mincho | fonts-ipafont-mincho fonts-japanese-gothic | fonts-ipafont-gothic fonts-arphic-ukai fonts-arphic-uming fonts-nanum

The following NEW packages will be installed: chafa dbus fontconfig-config fonts-dejavu-core fonts-droid-fallback fonts-noto-mono fonts-urw-base35 ghostscript gsfonts imagemagick-6-common krb5-locales libapparmor1 libavahi-client3 libavahi-common-data libavahi-common3 libbsd0 libchafa0 libcups2 libdbus-1-3 libexpat1 libfftw3-double3 libfontconfig1 libfreetype6 libglib2.0-0 libglib2.0-data libgomp1 libgs9 libgs9-common libgssapi-krb5-2 libicu66 libidn11 libijs-0.35 libjbig0 libjbig2dec0 libjpeg-turbo8 libjpeg8 libk5crypto3 libkeyutils1 libkrb5-3 libkrb5support0 liblcms2-2 liblqr-1-0 libltdl7 libmagickcore-6.q16-6 libmagickwand-6.q16-6 libopenjp2-7 libpaper-utils libpaper1 libpng16-16 libssl1.1 libtiff5 libwebp6 libwebpmux3 libx11-6 libx11-data libxau6 libxcb1 libxdmcp6 libxext6 libxml2 neofetch poppler-data shared-mime-info tzdata ucf xdg-user-dirs

0 upgraded, 66 newly installed, 0 to remove and 6 not upgraded.

Need to get 36.2 MB of archives.

After this operation, 136 MB of additional disk space will be used.

Get:1 http://archive.ubuntu.com/ubuntu focal/main amd64 fonts-droid-fallback all 1:6.0.1r16-1.1 [1805 kB]

...

Get:66 http://archive.ubuntu.com/ubuntu focal/universe amd64 neofetch all 7.0.0-1 [77.5 kB]

Fetched 36.2 MB in 2s (22.1 MB/s)

...

Setting up ghostscript (9.50~dfsg-5ubuntu4.2) ...

Processing triggers for libc-bin (2.31-0ubuntu9.1) ...

STEP 3: COMMIT neofetch-ubuntu:20.04

--> 6486fa42efe

6486fa42efe5df4f761f4062d4986b7ec60b14d9d99d92d2aff2c26da61d13af

Note

You might need sudo to run this command.

As you can see, we just successfully built the image. We also got a hash as an ID for it.

If you were paying attention, you noticed that I chained the commands with && instead of using multiple RUN commands. You can use as many RUN commands as you like.

But be careful, each one of those commands creates a layer. The more layers you create, the more time they require to download/upload.

It might not seem like a lot of time to download a few extra layers on one system. But if we talk about container orchestration platforms, it makes a big difference there.
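To make the layer count a bit more tangible, here is a rough sketch in Python that counts the instructions in a Dockerfile that add a filesystem layer. It assumes, as the Docker documentation describes, that RUN, COPY and ADD are the layer-creating instructions, and it ignores finer points such as multi-stage builds. The function name and the two sample Dockerfiles are my own.

```python
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


def count_layer_instructions(dockerfile_text):
    """Count the instructions in a Dockerfile that add a filesystem layer."""
    count = 0
    continued = False  # True while inside a backslash-continued instruction
    for raw_line in dockerfile_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        if not continued:
            keyword = line.split(None, 1)[0].upper()
            if keyword in LAYER_INSTRUCTIONS:
                count += 1
        continued = line.endswith("\\")
    return count


# One RUN that chains both commands with &&.
chained = (
    "FROM ubuntu:20.04\n"
    "RUN apt-get update && \\\n"
    "    apt-get install -y neofetch\n"
)

# The same work split across two RUN instructions.
separate = (
    "FROM ubuntu:20.04\n"
    "RUN apt-get update\n"
    "RUN apt-get install -y neofetch\n"
)

print(count_layer_instructions(chained))   # 1
print(count_layer_instructions(separate))  # 2
```

Same result inside the image, but the second Dockerfile carries one more layer to push and pull.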

Let's examine the build a bit more and see what we got.

STEP 1: FROM ubuntu:20.04

STEP 2: RUN apt-get update && apt-get install -y neofetch

The first step was to download the base image so we could use it, then we added a layer which installed neofetch. Let's list our images.

podman images

REPOSITORY                 TAG    IMAGE ID      CREATED        SIZE

localhost/neofetch-ubuntu  20.04  6486fa42efe5  5 minutes ago  241 MB

docker.io/library/ubuntu   20.04  f63181f19b2f  5 weeks ago    75.3 MB

We can see that we have localhost/neofetch-ubuntu. If we examine the ID, we can see that it is the same as the one given to us at the end of the build.

Now that we created a brand-spanking-new image, we can run it.

podman images

REPOSITORY                 TAG    IMAGE ID      CREATED        SIZE

localhost/neofetch-ubuntu  20.04  6486fa42efe5  6 minutes ago  241 MB

docker.io/library/ubuntu   20.04  f63181f19b2f  5 weeks ago    75.3 MB

First we list our images. Then we choose which one to run.

podman run -it neofetch-ubuntu:20.04 neofetch

neofetch is installed in that container, because the image has it.

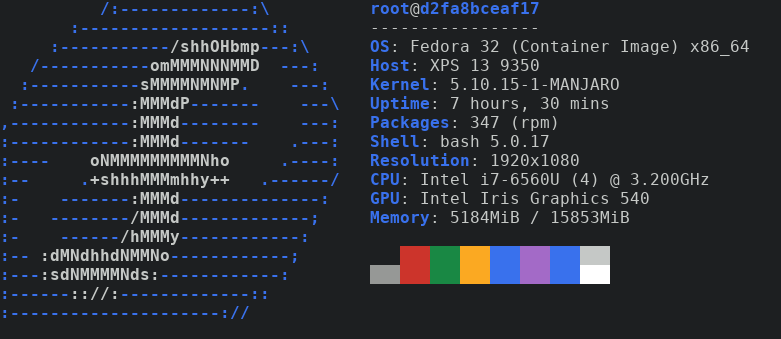

We can also build an image based on something else, maybe Fedora ?

I looked in Dockerhub (Fedora) and found the following image.

FROM fedora:32

RUN dnf install -y neofetch

We can duplicate what we did before real quick. Save the file, then run the command to build the image.

podman build -t neofetch-fedora:20.04 -f Dockerfile.fedora .

STEP 1: FROM fedora:32

STEP 2: RUN dnf install -y neofetch

Fedora 32 openh264 (From Cisco) - x86_64 2.2 kB/s | 2.5 kB 00:01

Fedora Modular 32 - x86_64 4.1 MB/s | 4.9 MB 00:01

Fedora Modular 32 - x86_64 - Updates 4.9 MB/s | 4.4 MB 00:00

Fedora 32 - x86_64 - Updates 9.0 MB/s | 29 MB 00:03

Fedora 32 - x86_64 9.8 MB/s | 70 MB 00:07

Dependencies resolved.

========================================================================================

Package Arch Version Repo Size

========================================================================================

Installing:

neofetch noarch 7.1.0-3.fc32 updates 90 k

Installing dependencies:

ImageMagick-libs x86_64 1:6.9.11.27-1.fc32 updates 2.3 M

LibRaw x86_64 0.19.5-4.fc32 updates 320 k

...

xorg-x11-utils x86_64 7.5-34.fc32 fedora 108 k

Transaction Summary

========================================================================================

Install 183 Packages

Total download size: 62 M

Installed size: 203 M

Downloading Packages:

(1/183): LibRaw-0.19.5-4.fc32.x86_64.rpm 480 kB/s | 320 kB 00:00

...

xorg-x11-utils-7.5-34.fc32.x86_64

Complete!

STEP 3: COMMIT neofetch-fedora:20.04

--> a5e57f6d5f1

a5e57f6d5f13075a105e02000e00589bab50d913900ee60399cd5a092ceca5a3

Then, run the container.

podman run -it neofetch-fedora:20.04 neofetch

Conclusion

A final thought before I let you go. You may have noticed that I used Podman instead of Docker. In these examples, both commands should be interchangeable.

Remember kids, containers are cool! They can be used for a wide variety of things. They are great at many things and with the help of container orchestration platforms, they can scale better than ever. They are also very bad at certain things. Be careful where to use them, how to use them and when to use them. Stay safe and mainly have fun!

DONE Playing with containers and Tor docker linux @text_editors ubuntu fedora proxy privoxy

As my followers well know by now, I am a tinkerer at heart. Why do I do things ? No one knows ! I don't even know.

All I know, all I can tell you, is that I like to see what I can do with the tools I have at hand, and how I can bend them to my will. Why, you may ask ? The answer is a bit complicated; part of who I am, part of what I do as a DevOps. Bottom line is, this time I was curious.

I went down a road that taught me so much more about containers, docker, docker-compose and even Linux itself.

The question I had was simple: can I route a container's traffic only through Tor running in another container?

Tor

I usually like to start topics that I haven't mentioned before with definitions. In this case, what is Tor, you may ask ?

Tor is free software and an open network that helps you defend against traffic analysis, a form of network surveillance that threatens personal freedom and privacy, confidential business activities and relationships, and state security.

That home page is obscure now, though, because it was replaced by the new design of the website. Don't get me wrong, I love what Tor has done with all the services they offer. But seeing so much importance given to the browser only, with the rest left for dead when it comes to the website, I have to say, makes me a bit sad.

Anyway, let's share the love for Tor and thank them for the beautiful project they offered humanity.

Now that we thanked them, let's abuse it.

Tor in a container

The task I set out to explore relied on Tor being containerized. The first thing I do is, simply, not re-invent the wheel. Let's find out if someone already took on that task.

With a little bit of searching, I found the dperson/torproxy docker image. It isn't ideal but I believe it is written to be rebuilt.

Can we run it ?

docker run -it -p 127.0.0.1:8118:8118 -d dperson/torproxy

curl -Lx http://localhost:8118 http://jsonip.com/

And this is definitely not your IP. Don't take my word for it! Go to http://jsonip.com/ in a browser and see for yourself.
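If you would rather script that check than eyeball it, here is a minimal sketch in Python using only the standard library. It assumes the proxy is listening on `http://localhost:8118` as in the command above, and that the service answers with a JSON document containing an `ip` field, which is my assumption about jsonip.com's response. The function names are my own.

```python
import json
import urllib.request


def build_proxy_opener(proxy_url="http://localhost:8118"):
    """Return a urllib opener that sends HTTP and HTTPS requests via the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def public_ip(opener, url="http://jsonip.com/", timeout=30):
    """Fetch the JSON answer of the IP echo service and return its 'ip' field."""
    with opener.open(url, timeout=timeout) as response:
        return json.load(response)["ip"]
```

Calling `public_ip(build_proxy_opener())` goes through the Tor proxy, while `public_ip(urllib.request.build_opener(urllib.request.ProxyHandler({})))` skips any proxy. The two answers should differ.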

Now that we know we can run Tor in a container effectively, let's kick it up a notch.

docker-compose

I will be testing and making changes as I go along. For this reason, it's a good idea to use docker-compose to do this.

Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration.

Now that we saw what the docker team has to say about docker-compose, let's go ahead and use it.

First, let's implement what we just ran ad-hoc in docker-compose.

---

version: '3.9'

services:

  torproxy:

    image: dperson/torproxy

    container_name: torproxy

    restart: unless-stopped

Air-gapped container

The next piece of the puzzle is to figure out if and how we can create an air-gapped container.

It turns out, we can create an internal network in docker that has no access to the internet.

First, the air-gapped container.

  air-gapped:

    image: ubuntu

    container_name: air-gapped

    restart: unless-stopped

    command:

      - bash

      - -c

      - sleep infinity

    networks:

      - no-internet

Then comes the network.

networks:

  no-internet:

    driver: bridge

    internal: true

Let's put it all together in a docker-compose.yaml file and run it.

docker-compose up -d

Keep that terminal open, and let's put the hypothesis to the test and see if it rises up to be a theory.

docker exec air-gapped apt-get update

Aaaaand…

Err:1 http://archive.ubuntu.com/ubuntu focal InRelease

Temporary failure resolving 'archive.ubuntu.com'

Err:2 http://security.ubuntu.com/ubuntu focal-security InRelease

Temporary failure resolving 'security.ubuntu.com'

Err:3 http://archive.ubuntu.com/ubuntu focal-updates InRelease

Temporary failure resolving 'archive.ubuntu.com'

Err:4 http://archive.ubuntu.com/ubuntu focal-backports InRelease

Temporary failure resolving 'archive.ubuntu.com'

Reading package lists...

W: Failed to fetch http://archive.ubuntu.com/ubuntu/dists/focal/InRelease Temporary failure resolving 'archive.ubuntu.com'

W: Failed to fetch http://archive.ubuntu.com/ubuntu/dists/focal-updates/InRelease Temporary failure resolving 'archive.ubuntu.com'

W: Failed to fetch http://archive.ubuntu.com/ubuntu/dists/focal-backports/InRelease Temporary failure resolving 'archive.ubuntu.com'

W: Failed to fetch http://security.ubuntu.com/ubuntu/dists/focal-security/InRelease Temporary failure resolving 'security.ubuntu.com'

W: Some index files failed to download. They have been ignored, or old ones used instead.

Looks like it's real peeps, hooray !
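Every error apt reported is a name resolution failure, so the same check boils down to "can this hostname be resolved?". Here is a minimal sketch of that in Python, assuming an interpreter is available wherever you run it (as far as I know the stock ubuntu image does not ship one, so you would have to bring your own). The function name is my own.

```python
import socket


def can_resolve(hostname):
    """Return True if the hostname resolves to at least one address."""
    try:
        return len(socket.getaddrinfo(hostname, None)) > 0
    except socket.gaierror:
        # This is the programmatic twin of apt's "Temporary failure resolving".
        return False
```

Inside the air-gapped container, `can_resolve("archive.ubuntu.com")` would be expected to return False.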

Putting everything together

Okay, now let's put everything together. The list of changes we need to make is minimal. First, I will list them, then I will simply write them out in docker-compose.

- Create an internet network for the Tor container

- Attach the internet network to the Tor container

- Attach the no-internet network to the Tor container so that our air-gapped container can access it.

Let's get to work.

---

version: '3.9'

services:

  torproxy:

    image: dperson/torproxy

    container_name: torproxy

    restart: unless-stopped

    networks:

      - no-internet

      - internet

  air-gapped:

    image: ubuntu

    container_name: air-gapped

    restart: unless-stopped

    command:

      - bash

      - -c

      - sleep infinity

    networks:

      - no-internet

networks:

  no-internet:

    driver: bridge

    internal: true

  internet:

    driver: bridge

    internal: false

Run everything.

docker-compose up -d

Yes, this will run it in the background and there is no need for you to open another terminal. It's always good to know both ways. Anyway, let's test.

Let's exec into the container.

docker exec -it air-gapped bash

Then we configure apt to use our torproxy service.

echo 'Acquire::http::Proxy "http://torproxy:8118/";' > /etc/apt/apt.conf.d/proxy

echo "export HTTP_PROXY=http://torproxy:8118/" >> ~/.bashrc

echo "export HTTPS_PROXY=http://torproxy:8118/" >> ~/.bashrc

export HTTP_PROXY=http://torproxy:8118/

export HTTPS_PROXY=http://torproxy:8118/

apt-get update

apt-get upgrade -y

DEBIAN_FRONTEND=noninteractive apt-get install -y curl

Harvesting the fruits of our labour

First, we always check if everything is set correctly.

While inside the container, we check the environment variables.

env | grep HTTP

You should see.

HTTPS_PROXY=http://torproxy:8118/

HTTP_PROXY=http://torproxy:8118/
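Many tools pick these variables up on their own. As a small sketch, assuming Python is installed in the container, the standard library's `urllib.request.getproxies_environment()` shows what a Python program would see. The helper name `missing_proxy_schemes` is my own.

```python
import urllib.request


def missing_proxy_schemes(schemes=("http", "https")):
    """Return the schemes that have no proxy configured in the environment."""
    # getproxies_environment() reads the <scheme>_proxy variables,
    # in either lowercase or uppercase form.
    proxies = urllib.request.getproxies_environment()
    return [scheme for scheme in schemes if scheme not in proxies]
```

An empty list from `missing_proxy_schemes()` means both HTTP and HTTPS requests made through urllib would go to the torproxy service.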

Then, we curl our IP.

curl https://jsonip.com/

And that is also not your IP.

It works !

Conclusion

Is it possible to route a container through another Tor container ?

The answer is obviously Yes and this is the way to do it. Enjoy.

DONE Let's play with Traefik docker linux traefik nginx ssl letsencrypt

I've been playing around with containers for a few years now. I find them very useful. If you host your own, like I do, you probably write a lot of nginx configurations, maybe apache.

If that's the case, then you have your own solution to get certificates. I'm also assuming that you are using Let's Encrypt with certbot or something.

Well, I didn't want to anymore. It was time to consolidate. Here comes Traefik.

Traefik

So Traefik is

an open-source Edge Router that makes publishing your services a fun and easy experience. It receives requests on behalf of your system and finds out which components are responsible for handling them.

Which made me realize, I still need nginx somewhere. We'll see when we get to it. Let's focus on Traefik.

Configuration

If you run a lot of containers and manage them, then you probably use docker-compose.

I'm still using version 2.3. I know I am due for an upgrade but I'm working on it slowly.

It's a bigger project… One step at a time.

Let's start from the top, literally.

---

version: '2.3'

services:

Note

Upgrading to version 3.x of docker-compose requires the creation of a network to link containers together. It's worth investing in, but this is not a docker-compose tutorial.

Then comes the service.

  traefik:

    container_name: traefik

    image: "traefik:latest"

    restart: unless-stopped

    mem_limit: 40m

    mem_reservation: 25m

And of course, who can forget the volume mounting.

    volumes:

      - "/var/run/docker.sock:/var/run/docker.sock:ro"

Design

Now let's talk design to see how we're going to configure this bad boy.

I want Traefik to listen on ports 80 and 443 at a minimum to serve traffic.

Let's do that.

    command:

      - --entrypoints.web.address=:80

      - --entrypoints.websecure.address=:443

And let's not forget to map them.

    ports:

      - "80:80"

      - "443:443"

Next, we would like to redirect http to https always.

      - --entrypoints.web.http.redirections.entryPoint.to=websecure

      - --entrypoints.web.http.redirections.entryPoint.scheme=https

We are using docker, so let's configure that as the provider.

      - --providers.docker

We can set the log level.

      - --log.level=INFO

If you want a dashboard, you have to enable it.

      - --api.dashboard=true

And finally, if you're using Prometheus to scrape metrics… You have to enable that too.

      - --metrics.prometheus=true

Let's Encrypt

Let's talk TLS. You want to serve encrypted traffic to users. You will need an SSL certificate.

Your best bet is open source. Who are we kidding, you'd want to go with Let's Encrypt.

Let's configure ACME to do just that: get us certificates. In this example, we are going to be using Cloudflare for the DNS challenge.

      - --certificatesresolvers.cloudflareresolver.acme.email=<your@email.here>
      - --certificatesresolvers.cloudflareresolver.acme.dnschallenge.provider=cloudflare
      - --certificatesresolvers.cloudflareresolver.acme.storage=./acme.json

Warning

Let's Encrypt has set limits on how many certificates you can request within a given period of time. To test your certificate request and renewal processes, use their staging infrastructure. It is made for that purpose.

Then we mount acme.json, for persistence.

      - "./traefik/acme.json:/acme.json"

Let's not forget to add our Cloudflare API credentials as environment variables for Traefik to use.

    environment:
      - CLOUDFLARE_EMAIL=<your-cloudflare@email.here>
      - CLOUDFLARE_API_KEY=<your-api-key-goes-here>

Dashboard

Now let's configure Traefik a bit more with a bit of labeling.

First, we specify the host Traefik should listen for to serve the dashboard.

    labels:
      - "traefik.http.routers.dashboard-api.rule=Host(`dashboard.your-host.here`)"
      - "traefik.http.routers.dashboard-api.service=api@internal"

With a little bit of Traefik documentation searching and a lot of help from htpasswd, we can create a basicauth login to protect the dashboard from public use.
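One detail that is easy to miss: docker-compose treats `$` as the start of a variable, so every `$` in the htpasswd output has to be doubled before it goes into a label. Here is a minimal sketch of that escaping step, assuming a user named `admin` and a made-up hash (both are placeholders, not real credentials).

```shell
# In practice the entry would come from htpasswd, for example:
#   htpasswd -nb admin 'your-password'
# The value below is a made-up placeholder shaped like htpasswd output.
entry='admin:$apr1$abcdefgh$0123456789abcdefghijkl'

# Double every "$" so docker-compose does not treat it as a variable.
escaped=$(printf '%s\n' "$entry" | sed -e 's/\$/$$/g')

# Print the value that would go after "basicauth.users=" in the label.
printf '%s\n' "$escaped"
```

The printed string is what would be pasted into the `basicauth.users` label.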

      - "traefik.http.routers.dashboard-api.middlewares=dashboard-auth-user"
      - "traefik.http.middlewares.dashboard-auth-user.basicauth.users=<user>:$$pws5$$rWsEfeUw9$$uV45uwsGeaPbu8RSexB9/"
      - "traefik.http.routers.dashboard-api.tls.certresolver=cloudflareresolver"

Middleware

I'm not going to go into details about the middleware flags configured here but you're welcome to check the Traefik middleware docs.

      - "traefik.http.middlewares.frame-deny.headers.framedeny=true"
      - "traefik.http.middlewares.browser-xss-filter.headers.browserxssfilter=true"
      - "traefik.http.middlewares.ssl-redirect.headers.sslredirect=true"

Full Configuration

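Let's put everything together now.

  traefik:
    container_name: traefik
    image: "traefik:latest"
    restart: unless-stopped
    mem_limit: 40m
    mem_reservation: 25m
    ports:
      - "80:80"
      - "443:443"
    command:
      - --entrypoints.web.address=:80
      - --entrypoints.websecure.address=:443
      - --entrypoints.web.http.redirections.entryPoint.to=websecure
      - --entrypoints.web.http.redirections.entryPoint.scheme=https
      - --providers.docker
      - --log.level=INFO
      - --api.dashboard=true
      - --metrics.prometheus=true
      - --certificatesresolvers.cloudflareresolver.acme.email=<your@email.here>
      - --certificatesresolvers.cloudflareresolver.acme.dnschallenge.provider=cloudflare
      - --certificatesresolvers.cloudflareresolver.acme.storage=./acme.json
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock:ro"
      - "./traefik/acme.json:/acme.json"
    environment:
      - CLOUDFLARE_EMAIL=<your-cloudflare@email.here>
      - CLOUDFLARE_API_KEY=<your-api-key-goes-here>
    labels:
      - "traefik.http.routers.dashboard-api.rule=Host(`dashboard.your-host.here`)"
      - "traefik.http.routers.dashboard-api.service=api@internal"
      - "traefik.http.routers.dashboard-api.middlewares=dashboard-auth-user"
      - "traefik.http.middlewares.dashboard-auth-user.basicauth.users=<user>:$$pws5$$rWsEfeUw9$$uV45uwsGeaPbu8RSexB9/"
      - "traefik.http.routers.dashboard-api.tls.certresolver=cloudflareresolver"
      - "traefik.http.middlewares.frame-deny.headers.framedeny=true"
      - "traefik.http.middlewares.browser-xss-filter.headers.browserxssfilter=true"
      - "traefik.http.middlewares.ssl-redirect.headers.sslredirect=true"

nginx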

nginx pronounced

[engine x] is an HTTP and reverse proxy server, a mail proxy server, and a generic TCP/UDP proxy server, originally written by Igor Sysoev.

In this example, we're going to assume you have a static blog generated by a static blog generator of your choice and you would like to serve it for people to read it.

So let's do this quickly as there isn't much to tell except when it comes to labels.

  nginx:
    container_name: nginx
    image: nginxinc/nginx-unprivileged:alpine
    restart: unless-stopped
    mem_limit: 8m
    command: ["nginx", "-enable-prometheus-metrics", "-g", "daemon off;"]
    volumes:
      - "./blog/:/usr/share/nginx/html/blog:ro"
      - "./nginx/default.conf.template:/etc/nginx/templates/default.conf.template:ro"
    environment:
      - NGINX_BLOG_PORT=80
      - NGINX_BLOG_HOST=<blog.your-host.here>

We are mounting the blog directory from our host to /usr/share/nginx/html/blog as read-only into the nginx container. We are also providing nginx with a template configuration and passing the variables as environment variables as you noticed. It is also mounted as read-only. The configuration template looks like the following, if you're wondering.

server {
    listen ${NGINX_BLOG_PORT};
    server_name localhost;

    root /usr/share/nginx/html/${NGINX_BLOG_HOST};

    location / {
        index index.html;
        try_files $uri $uri/ =404;
    }
}

Traefik configuration

The Traefik configuration for this service is a little bit tricky the first time around.

First, we configure the host like we did before.

    labels:
      - "traefik.http.routers.blog-http.rule=Host(`blog.your-host.here`)"

We tell Traefik about our service and the port to loadbalance on.

      - "traefik.http.routers.blog-http.service=blog-http"
      - "traefik.http.services.blog-http.loadbalancer.server.port=80"

We configure the middleware to use configuration defined in the Traefik middleware configuration section.

      - "traefik.http.routers.blog-http.middlewares=blog-main"
      - "traefik.http.middlewares.blog-main.chain.middlewares=frame-deny,browser-xss-filter,ssl-redirect"

Finally, we tell it about our resolver to generate an SSL Certificate.

      - "traefik.http.routers.blog-http.tls.certresolver=cloudflareresolver"

Full Configuration

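Let's put the nginx service together.

  nginx:
    container_name: nginx
    image: nginxinc/nginx-unprivileged:alpine
    restart: unless-stopped
    mem_limit: 8m
    command: ["nginx", "-enable-prometheus-metrics", "-g", "daemon off;"]
    volumes:
      - "./blog/:/usr/share/nginx/html/blog:ro"
      - "./nginx/default.conf.template:/etc/nginx/templates/default.conf.template:ro"
    environment:
      - NGINX_BLOG_PORT=80
      - NGINX_BLOG_HOST=<blog.your-host.here>
    labels:
      - "traefik.http.routers.blog-http.rule=Host(`blog.your-host.here`)"
      - "traefik.http.routers.blog-http.service=blog-http"
      - "traefik.http.services.blog-http.loadbalancer.server.port=80"
      - "traefik.http.routers.blog-http.middlewares=blog-main"
      - "traefik.http.middlewares.blog-main.chain.middlewares=frame-deny,browser-xss-filter,ssl-redirect"
      - "traefik.http.routers.blog-http.tls.certresolver=cloudflareresolver"

Finale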

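It's finally time to put everything together !

---
version: '2.3'

services:
  traefik:
    container_name: traefik
    image: "traefik:latest"
    restart: unless-stopped
    mem_limit: 40m
    mem_reservation: 25m
    ports:
      - "80:80"
      - "443:443"
    command:
      - --entrypoints.web.address=:80
      - --entrypoints.websecure.address=:443
      - --entrypoints.web.http.redirections.entryPoint.to=websecure
      - --entrypoints.web.http.redirections.entryPoint.scheme=https
      - --providers.docker
      - --log.level=INFO
      - --api.dashboard=true
      - --metrics.prometheus=true
      - --certificatesresolvers.cloudflareresolver.acme.email=<your@email.here>
      - --certificatesresolvers.cloudflareresolver.acme.dnschallenge.provider=cloudflare
      - --certificatesresolvers.cloudflareresolver.acme.storage=./acme.json
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock:ro"
      - "./traefik/acme.json:/acme.json"
    environment:
      - CLOUDFLARE_EMAIL=<your-cloudflare@email.here>
      - CLOUDFLARE_API_KEY=<your-api-key-goes-here>
    labels:
      - "traefik.http.routers.dashboard-api.rule=Host(`dashboard.your-host.here`)"
      - "traefik.http.routers.dashboard-api.service=api@internal"
      - "traefik.http.routers.dashboard-api.middlewares=dashboard-auth-user"
      - "traefik.http.middlewares.dashboard-auth-user.basicauth.users=<user>:$$pws5$$rWsEfeUw9$$uV45uwsGeaPbu8RSexB9/"
      - "traefik.http.routers.dashboard-api.tls.certresolver=cloudflareresolver"
      - "traefik.http.middlewares.frame-deny.headers.framedeny=true"
      - "traefik.http.middlewares.browser-xss-filter.headers.browserxssfilter=true"
      - "traefik.http.middlewares.ssl-redirect.headers.sslredirect=true"

  nginx:
    container_name: nginx
    image: nginxinc/nginx-unprivileged:alpine
    restart: unless-stopped
    mem_limit: 8m
    command: ["nginx", "-enable-prometheus-metrics", "-g", "daemon off;"]
    volumes:
      - "./blog/:/usr/share/nginx/html/blog:ro"
      - "./nginx/default.conf.template:/etc/nginx/templates/default.conf.template:ro"
    environment:
      - NGINX_BLOG_PORT=80
      - NGINX_BLOG_HOST=<blog.your-host.here>
    labels:
      - "traefik.http.routers.blog-http.rule=Host(`blog.your-host.here`)"
      - "traefik.http.routers.blog-http.service=blog-http"
      - "traefik.http.services.blog-http.loadbalancer.server.port=80"
      - "traefik.http.routers.blog-http.middlewares=blog-main"
      - "traefik.http.middlewares.blog-main.chain.middlewares=frame-deny,browser-xss-filter,ssl-redirect"
      - "traefik.http.routers.blog-http.tls.certresolver=cloudflareresolver"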

Now we're all set to save it in a docker-compose.yaml file and run it.

docker-compose up -d

If everything is configured correctly, your blog should pop up shortly. Enjoy !
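If Traefik complains about its certificate store on the first start, the usual suspect is the acme.json bind mount. Docker creates a directory when the host path of a bind mount does not exist, and Traefik expects the file to be readable by its owner only. Here is a small sketch of the preparation step, assuming the ./traefik/acme.json host path used in the volume mapping above and that it is run from the directory holding docker-compose.yaml.

```shell
# Create the host directory and an empty certificate store
# before the first "docker-compose up -d".
mkdir -p ./traefik
touch ./traefik/acme.json

# Restrict the file to its owner (read/write only).
chmod 600 ./traefik/acme.json

# Show the result for a quick visual check.
ls -l ./traefik/acme.json
```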

K3s @k3s

DONE Building k3s on a Pi arm kubernetes

I have had a Pi lying around, used for a simple task, for a while now. A few days ago, I was browsing the web, learning more about privacy, when I stumbled upon AdGuard Home.

I have been using it as my internal DNS on top of the security and privacy layers I add to my machine. Its benefits can be argued but it is a DNS after all and I wanted to see what else it can do for me. Anyway, I digress. I searched to see if I could find a container for AdGuard Home and I did.

At this point, I started thinking about what I could do to make the Pi more useful.

That's when k3s came into the picture.

Pre-requisites

As this is not a Pi tutorial, I am going to be assuming that you have a Raspberry Pi with Raspberry Pi OS Buster installed on it. The assumption does not mean you cannot install any other OS on the Pi and run this setup. It only means that I have tested this on Buster and that your mileage may vary on anything else.

Prepare the Pi

Now that you have Buster already installed, let's go ahead and fix a small default configuration issue with it.

K3s uses iptables to route things around correctly. Buster uses nftables by default, so let's switch it to iptables.

$ sudo iptables -F
$ sudo update-alternatives --set iptables /usr/sbin/iptables-legacy
$ sudo update-alternatives --set ip6tables /usr/sbin/ip6tables-legacy
$ sudo reboot

At this point, your Pi should reboot. Your OS is configured for the next step.
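After the reboot, it may be worth confirming that the switch took effect. On Buster, `iptables --version` reports the active backend in parentheses, either `(legacy)` or `(nf_tables)`. The helper below is a small sketch; the function name `iptables_backend` is made up for this example.

```shell
# Classify the backend from an "iptables --version" line.
# iptables_backend is a hypothetical helper, not part of iptables.
iptables_backend() {
  case "$1" in
    *"(legacy)"*)    echo legacy ;;
    *"(nf_tables)"*) echo nf_tables ;;
    *)               echo unknown ;;
  esac
}

# Only query iptables when it is actually installed.
if command -v iptables >/dev/null 2>&1; then
  iptables_backend "$(iptables --version)"
fi
```

If it prints `nf_tables`, the `update-alternatives` step did not stick and is worth repeating before installing k3s.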

Pre-install Configuration

After testing k3s a few times, I found out that by default it will deploy a few extra services like Traefik.

Unfortunately, just like anything else, the default configuration is just that. It's plain and not very useful from the start. You will need to tweak it.

This step could be done either before or after the deployment. Figuring out the pre-deploy route is a bit more involved but a bit more fun as well.

The first thing you need to know is that the normal behavior of k3s is to deploy anything found in /var/lib/rancher/k3s/server/manifests/.

So a good first step is, of course, to proceed with creating that.

$ sudo mkdir -p /var/lib/rancher/k3s/server/manifests/

The other thing to know is that k3s can deploy Helm Charts. Before beginning the setup, it creates the manifests it deploys by default in the manifest path I mentioned. If you would like to see what it deployed and how, visit that path after k3s runs. I did, and I took their Traefik configuration, whose defaults I was unhappy with.

My next step was securing the defaults as much as possible and I found out that Traefik can do basic authentication. As a starting point, that's great. Let's create the credentials.

$ htpasswd -c ./auth myUser

That was easy so far. Let's kick it up a notch and create the manifest for k3s.

Create traefik.yaml in /var/lib/rancher/k3s/server/manifests/ with the following content.

---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: traefik
  namespace: kube-system
spec:
  chart: https://%{KUBERNETES_API}%/static/charts/traefik-1.81.0.tgz
  valuesContent: |-
    rbac:
      enabled: true
    ssl:
      enabled: true
    dashboard:
      enabled: true
      domain: traefik-ui.example.com
      auth:
        basic:
          myUser: $ars3$4A5tdstr$trSDDa4467Tsa54sTs.
    metrics:
      prometheus:
        enabled: false
    kubernetes:
      ingressEndpoint:
        useDefaultPublishedService: true
    image: "rancher/library-traefik"
    tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"

It's a Pi, I don't need prometheus so I disabled it. I also enabled the dashboard and added the credentials we created in the previous step.

Now, the Helm Chart will deploy an ingress and expose the dashboard for you on the host set in domain.

Note

I figured out the values to set in valuesContent by reading the Helm Chart

K3s

If everything is in place, you are ready to proceed. You can install k3s, now, but before I get to that step, I will say a few things about k3s.

K3s has a smaller feature set than k8s, hence the smaller footprint. Read the documentation to see if you need any of the missing features. The second thing to mention is that k3s is a single-binary deployment that uses containerd. That's why we will use the script installation method, as it adds the necessary systemd configuration for us. It is a nice gesture.

Let's do that, shall we ?

$ curl -sfL https://get.k3s.io | sh -s - --no-deploy traefik

Note

We need to make sure that k3s does not deploy its own traefik but ours.

Make sure to add --no-deploy traefik to our deployment command.

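Point traefik-ui.example.com, the domain we set in the manifest, to your Pi's IP in /etc/hosts on your machine. The IP address comes first, followed by the hostname.

192.168.0.5 traefik-ui.example.com

When the installation command is done, you should be able to visit http://traefik-ui.example.com/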