Mirror of https://github.com/actions/setup-python.git (synced 2024-11-22 14:48:36 +00:00)

This reverts commit bdd6409dc1.

Parent: bdd6409dc1
Commit: 948e5343c7

187 changed files with 15033 additions and 6120 deletions

.github/workflows/lint-yaml.yml (vendored, 12 lines changed)

@@ -1,12 +0,0 @@
name: Lint YAML
on: [pull_request]
jobs:
  lint:
    runs-on: ubuntu-latest
    steps:
    - uses: actions/checkout@master
    - name: Lint action.yml
      uses: ibiqlik/action-yamllint@master
      with:
        file_or_dir: action.yml
        config_file: yaml-lint-config.yml

.github/workflows/workflow.yml (vendored, 4 lines changed)

@@ -1,5 +1,5 @@
name: Main workflow
on: [push, pull_request]
on: [push]
jobs:
  run:
    name: Run

@@ -14,7 +14,7 @@ jobs:
    - name: Set Node.js 10.x
      uses: actions/setup-node@master
      with:
        node-version: 10.x
        version: 10.x

    - name: npm install
      run: npm install

.gitignore (vendored, 7 lines changed)

@@ -1,10 +1,7 @@
# Ignore node_modules, ncc is used to compile nodejs modules into a single file in the releases branch
node_modules/
# Explicitly not ignoring node_modules so that they are included in package downloaded by runner
!node_modules/
__tests__/runner/*

# Ignore js files that are transpiled from ts files in src/
lib/

# Rest of the file pulled from https://github.com/github/gitignore/blob/master/Node.gitignore
# Logs
logs

77

README.md

77

README.md

|

|

@ -16,11 +16,11 @@ See [action.yml](action.yml)

|

|||

Basic:

|

||||

```yaml

|

||||

steps:

|

||||

- uses: actions/checkout@v2

|

||||

- uses: actions/checkout@master

|

||||

- uses: actions/setup-python@v1

|

||||

with:

|

||||

python-version: '3.x' # Version range or exact version of a Python version to use, using SemVer's version range syntax

|

||||

architecture: 'x64' # optional x64 or x86. Defaults to x64 if not specified

|

||||

python-version: '3.x' # Version range or exact version of a Python version to use, using semvers version range syntax.

|

||||

architecture: 'x64' # (x64 or x86)

|

||||

- run: python my_script.py

|

||||

```

|

||||

|

||||

|

|

@ -28,13 +28,13 @@ Matrix Testing:

|

|||

```yaml

|

||||

jobs:

|

||||

build:

|

||||

runs-on: ubuntu-latest

|

||||

runs-on: ubuntu-16.04

|

||||

strategy:

|

||||

matrix:

|

||||

python-version: [ '2.x', '3.x', 'pypy2', 'pypy3' ]

|

||||

name: Python ${{ matrix.python-version }} sample

|

||||

steps:

|

||||

- uses: actions/checkout@v2

|

||||

- uses: actions/checkout@master

|

||||

- name: Setup python

|

||||

uses: actions/setup-python@v1

|

||||

with:

|

||||

|

|

@ -43,73 +43,6 @@ jobs:

|

|||

- run: python my_script.py

|

||||

```

|

||||

|

||||

Exclude a specific Python version:

|

||||

```yaml

|

||||

jobs:

|

||||

build:

|

||||

runs-on: ${{ matrix.os }}

|

||||

strategy:

|

||||

matrix:

|

||||

os: [ubuntu-latest, macos-latest, windows-latest]

|

||||

python-version: [2.7, 3.6, 3.7, 3.8, pypy2, pypy3]

|

||||

exclude:

|

||||

- os: macos-latest

|

||||

python-version: 3.8

|

||||

- os: windows-latest

|

||||

python-version: 3.6

|

||||

steps:

|

||||

- uses: actions/checkout@v2

|

||||

- name: Set up Python

|

||||

uses: actions/setup-python@v1

|

||||

with:

|

||||

python-version: ${{ matrix.python-version }}

|

||||

- name: Display Python version

|

||||

run: python -c "import sys; print(sys.version)"

|

||||

```

|

||||

|

||||

# Getting started with Python + Actions

|

||||

|

||||

Check out our detailed guide on using [Python with GitHub Actions](https://help.github.com/en/actions/automating-your-workflow-with-github-actions/using-python-with-github-actions).

|

||||

|

||||

# Hosted Tool Cache

|

||||

|

||||

GitHub hosted runners have a tools cache that comes with Python + PyPy already installed. This tools cache helps speed up runs and tool setup by not requiring any new downloads. There is an environment variable called `RUNNER_TOOL_CACHE` on each runner that describes the location of this tools cache and there is where you will find Python and PyPy installed. `setup-python` works by taking a specific version of Python or PyPy in this tools cache and adding it to PATH.

|

||||

|

||||

|| Location |

|

||||

|------|-------|

|

||||

|**Tool Cache Directory** |`RUNNER_TOOL_CACHE`|

|

||||

|**Python Tool Cache**|`RUNNER_TOOL_CACHE/Python/*`|

|

||||

|**PyPy Tool Cache**|`RUNNER_TOOL_CACHE/PyPy/*`|

|

||||

|

||||

GitHub virtual environments are setup in [actions/virtual-environments](https://github.com/actions/virtual-environments). During the setup, the available versions of Python and PyPy are automatically downloaded, setup and documented.

|

||||

- [Tools cache setup for Ubuntu](https://github.com/actions/virtual-environments/blob/master/images/linux/scripts/installers/hosted-tool-cache.sh)

|

||||

- [Tools cache setup for Windows](https://github.com/actions/virtual-environments/blob/master/images/win/scripts/Installers/Download-ToolCache.ps1)

|

||||

|

||||

# Using `setup-python` with a self hosted runner

|

||||

|

||||

If you would like to use `setup-python` on a self-hosted runner, you will need to download all versions of Python & PyPy that you would like and setup a similar tools cache locally for your runner.

|

||||

|

||||

- Create an global environment variable called `AGENT_TOOLSDIRECTORY` that will point to the root directory of where you want the tools installed. The env variable is preferrably global as it must be set in the shell that will install the tools cache, along with the shell that the runner will be using.

|

||||

- This env variable is used internally by the runner to set the `RUNNER_TOOL_CACHE` env variable

|

||||

- Example for Administrator Powershell: `[System.Environment]::SetEnvironmentVariable("AGENT_TOOLSDIRECTORY", "C:\hostedtoolcache\windows", [System.EnvironmentVariableTarget]::Machine)` (restart the shell afterwards)

|

||||

- Download the appropriate NPM packages from the [GitHub Actions NPM registry](https://github.com/orgs/actions/packages)

|

||||

- Make sure to have `npm` installed, and then [configure npm for use with GitHub packages](https://help.github.com/en/packages/using-github-packages-with-your-projects-ecosystem/configuring-npm-for-use-with-github-packages#authenticating-to-github-package-registry)

|

||||

- Create an empty npm project for easier installation (`npm init`) in the tools cache directory. You can delete `package.json`, `package.lock.json` and `node_modules` after all tools get installed

|

||||

- Before downloading a specific package, create an empty folder for the version of Python/PyPY that is being installed. If downloading Python 3.6.8 for example, create `C:\hostedtoolcache\windows\Python\3.6.8`

|

||||

- Once configured, download a specific package by calling `npm install`. Note (if downloading a PyPy package on Windows, you will need 7zip installed along with `7z.exe` added to your PATH)

|

||||

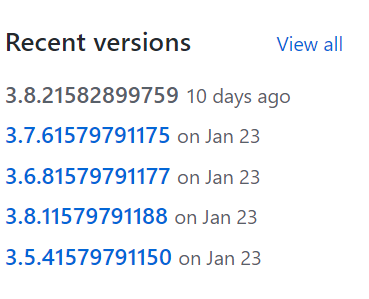

- Each NPM package has multiple versions that determine the version of Python or PyPy that should be installed.

|

||||

- `npm install @actions/toolcache-python-windows-x64@3.7.61579791175` for example installs Python 3.7.6 while `npm install @actions/toolcache-python-windows-x64@3.6.81579791177` installs Python 3.6.8

|

||||

- You can browse and find all available versions of a package by searching the GitHub Actions NPM registry

|

||||

|

||||

|

||||

# Using Python without `setup-python`

|

||||

|

||||

`setup-python` helps keep your dependencies explicit and ensures consistent behavior between different runners. If you use `python` in a shell on a GitHub hosted runner without `setup-python` it will default to whatever is in PATH. The default version of Python in PATH vary between runners and can change unexpectedly so we recommend you always use `setup-python`.

|

||||

|

||||

# Available versions of Python

|

||||

|

||||

For detailed information regarding the available versions of Python that are installed see [Software installed on GitHub-hosted runners](https://help.github.com/en/actions/automating-your-workflow-with-github-actions/software-installed-on-github-hosted-runners)

|

||||

|

||||

# License

|

||||

|

||||

The scripts and documentation in this project are released under the [MIT License](LICENSE)
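The tools-cache layout in the README table above (`RUNNER_TOOL_CACHE/Python/*`, `RUNNER_TOOL_CACHE/PyPy/*`) can be illustrated with a small directory scan. This is a minimal sketch, assuming each install sits at `<root>/<tool>/<version>/<arch>` next to an `<arch>.complete` marker file, which is how `@actions/tool-cache` recognizes finished installs. `listCachedVersions` is a hypothetical helper, not part of `setup-python`, and the demo builds a throwaway cache in a temp directory instead of reading a real runner.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Hypothetical helper: list versions of `toolName` fully installed for `arch`
// under <root>/<toolName>/<version>/<arch>, each with a sibling <arch>.complete marker.
function listCachedVersions(root, toolName, arch) {
  const toolDir = path.join(root, toolName);
  if (!fs.existsSync(toolDir)) return [];
  return fs
    .readdirSync(toolDir)
    .filter((version) => {
      const installDir = path.join(toolDir, version, arch);
      return fs.existsSync(installDir) && fs.existsSync(`${installDir}.complete`);
    })
    .sort(); // plain string sort, not SemVer order
}

// Build a throwaway cache: 3.6.8 is left without its marker, so it is skipped.
const root = fs.mkdtempSync(path.join(os.tmpdir(), "toolcache-"));
for (const [version, complete] of [["3.7.6", true], ["3.8.1", true], ["3.6.8", false]]) {
  const installDir = path.join(root, "Python", version, "x64");
  fs.mkdirSync(installDir, { recursive: true });
  if (complete) fs.writeFileSync(`${installDir}.complete`, "");
}

console.log(listCachedVersions(root, "Python", "x64")); // [ '3.7.6', '3.8.1' ]
```

Under this assumption, a self-hosted cache prepared as the README describes would need the same `<version>/<arch>` nesting and marker for a lookup like this to find it.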

action.yml (13 lines changed)

@@ -1,20 +1,13 @@
---
name: 'Setup Python'
description: 'Set up a specific version of Python and add the command-line tools to the PATH.'
description: 'Set up a specific version of Python and add the command-line tools to the PATH'
author: 'GitHub'
inputs:
  python-version:
    description: "Version range or exact version of a Python version to use, using SemVer's version range syntax."
    description: 'Version range or exact version of a Python version to use, using semvers version range syntax.'
    default: '3.x'
  architecture:
    description: 'The target architecture (x86, x64) of the Python interpreter.'
    default: 'x64'
outputs:
  python-version:
    description: "The installed python version. Useful when given a version range as input."
runs:
  using: 'node12'
  main: 'dist/index.js'
branding:
  icon: 'code'
  color: 'yellow'
  main: 'lib/setup-python.js'
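The `inputs` declared in `action.yml` above reach the JavaScript entry point as environment variables: the runner exposes each input as `INPUT_<NAME>` (upper-cased, spaces replaced by underscores, hyphens kept), and `core.getInput` reads and trims that value. The sketch below mimics the lookup under those assumptions; `readInput` is a hypothetical stand-in for the library function, and it takes the environment as a parameter so it can run outside a workflow. It also assumes the runner has already substituted `default:` values such as `3.x`.

```javascript
// Hypothetical stand-in for @actions/core's getInput: look up INPUT_<NAME>
// in the given environment, optionally require it, and trim the result.
function readInput(name, env, options = {}) {
  const key = `INPUT_${name.replace(/ /g, "_").toUpperCase()}`;
  const val = env[key] || "";
  if (options.required && !val) {
    throw new Error(`Input required and not supplied: ${name}`);
  }
  return val.trim();
}

// Environment as a runner might prepare it for the action.yml above;
// the hyphen in `python-version` survives in the variable name.
const env = {
  "INPUT_PYTHON-VERSION": " 3.x ",
  INPUT_ARCHITECTURE: "x64",
};

console.log(readInput("python-version", env)); // 3.x
console.log(readInput("architecture", env, { required: true })); // x64
```

This is why `lib/setup-python.js` can call `core.getInput('architecture', { required: true })` without its own fallback: the `default: 'x64'` in the metadata is expected to fill the variable first.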

dist/index.js (vendored, 4926 lines changed)

File diff suppressed because it is too large

@@ -2,30 +2,21 @@

### Checkin

- Do check in source (`src/`)
- Do check in a single `index.js` file after running `ncc`
- Do not check in `node_modules/`
- Do check in source (src)
- Do check in build output (lib)
- Do check in runtime node_modules
- Do not check in devDependency node_modules (husky can help, see below)

### NCC
### devDependencies

In order to avoid uploading `node_modules/` to the repository, we use [zeit/ncc](https://github.com/zeit/ncc) to create a single `index.js` file that gets saved in `dist/`.
To correctly check in node_modules without devDependencies, we run [Husky](https://github.com/typicode/husky) before each commit.
This step ensures that formatting and check-in rules are followed and that devDependencies are excluded. To make sure Husky runs correctly, please use the following workflow:

### Developing

If you're developing locally, you can run:
```
npm install
tsc
ncc build
npm install # installs all devDependencies including Husky
git add abc.ext # Add the files you've changed. This should include files in src, lib, and node_modules (see above)
git commit -m "Informative commit message" # Commit. This will run Husky
```
Any files generated using `tsc` will be added to `lib/`; however, those files are not uploaded to the repository either and are excluded using `.gitignore`.

During the commit step, Husky will take care of formatting all files with [Prettier](https://github.com/prettier/prettier)

### Testing

We ask that you include a link to a successful run that utilizes the changes you are working on. For example, if your changes are in the branch `newAwesomeFeature`, then show an example run that uses `setup-python@newAwesomeFeature` or `my-fork@newAwesomeFeature`. This will help speed up testing and help us confirm that there are no breaking changes or bugs.

### Releases

There is a `master` branch into which contributor changes are merged. There are also release branches such as `releases/v1` that are used for tagging (for example, the `v1` tag) and publishing new versions of the action. Changes from `master` are periodically merged into a release branch. You do not need to create any PR that merges changes from `master` into a release branch.
During the commit step, Husky will take care of formatting all files with [Prettier](https://github.com/prettier/prettier) as well as pruning out devDependencies using `npm prune --production`.
It will also make sure these changes are appropriately included in your commit (no further work is needed).

lib/find-python.js (normal file, 159 lines changed)

@@ -0,0 +1,159 @@
"use strict";
var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) {
    return new (P || (P = Promise))(function (resolve, reject) {
        function fulfilled(value) { try { step(generator.next(value)); } catch (e) { reject(e); } }
        function rejected(value) { try { step(generator["throw"](value)); } catch (e) { reject(e); } }
        function step(result) { result.done ? resolve(result.value) : new P(function (resolve) { resolve(result.value); }).then(fulfilled, rejected); }
        step((generator = generator.apply(thisArg, _arguments || [])).next());
    });
};
var __importStar = (this && this.__importStar) || function (mod) {
    if (mod && mod.__esModule) return mod;
    var result = {};
    if (mod != null) for (var k in mod) if (Object.hasOwnProperty.call(mod, k)) result[k] = mod[k];
    result["default"] = mod;
    return result;
};
Object.defineProperty(exports, "__esModule", { value: true });
const os = __importStar(require("os"));
const path = __importStar(require("path"));
const semver = __importStar(require("semver"));
let cacheDirectory = process.env['RUNNER_TOOLSDIRECTORY'] || '';
if (!cacheDirectory) {
    let baseLocation;
    if (process.platform === 'win32') {
        // On windows use the USERPROFILE env variable
        baseLocation = process.env['USERPROFILE'] || 'C:\\';
    }
    else {
        if (process.platform === 'darwin') {
            baseLocation = '/Users';
        }
        else {
            baseLocation = '/home';
        }
    }
    cacheDirectory = path.join(baseLocation, 'actions', 'cache');
}
const core = __importStar(require("@actions/core"));
const tc = __importStar(require("@actions/tool-cache"));
const IS_WINDOWS = process.platform === 'win32';
// Python has "scripts" or "bin" directories where command-line tools that come with packages are installed.
// This is where pip is, along with anything that pip installs.
// There is a separate directory for `pip install --user`.
//
// For reference, these directories are as follows:
// macOS / Linux:
// <sys.prefix>/bin (by default /usr/local/bin, but not on hosted agents -- see the `else`)
// (--user) ~/.local/bin
// Windows:
// <Python installation dir>\Scripts
// (--user) %APPDATA%\Python\PythonXY\Scripts
// See https://docs.python.org/3/library/sysconfig.html
function binDir(installDir) {
    if (IS_WINDOWS) {
        return path.join(installDir, 'Scripts');
    }
    else {
        return path.join(installDir, 'bin');
    }
}
// Note on the tool cache layout for PyPy:
// PyPy has its own versioning scheme that doesn't follow the Python versioning scheme.
// A particular version of PyPy may contain one or more versions of the Python interpreter.
// For example, PyPy 7.0 contains Python 2.7, 3.5, and 3.6-alpha.
// We only care about the Python version, so we don't use the PyPy version for the tool cache.
function usePyPy(majorVersion, architecture) {
    const findPyPy = tc.find.bind(undefined, 'PyPy', majorVersion.toString());
    let installDir = findPyPy(architecture);
    if (!installDir && IS_WINDOWS) {
        // PyPy only precompiles binaries for x86, but the architecture parameter defaults to x64.
        // On Hosted VS2017, we only install an x86 version.
        // Fall back to x86.
        installDir = findPyPy('x86');
    }
    if (!installDir) {
        // PyPy not installed in $(Agent.ToolsDirectory)
        throw new Error(`PyPy ${majorVersion} not found`);
    }
    // For PyPy, Windows uses 'bin', not 'Scripts'.
    const _binDir = path.join(installDir, 'bin');
    // On Linux and macOS, the Python interpreter is in 'bin'.
    // On Windows, it is in the installation root.
    const pythonLocation = IS_WINDOWS ? installDir : _binDir;
    core.exportVariable('pythonLocation', pythonLocation);
    core.addPath(installDir);
    core.addPath(_binDir);
}
function useCpythonVersion(version, architecture) {
    return __awaiter(this, void 0, void 0, function* () {
        const desugaredVersionSpec = desugarDevVersion(version);
        const semanticVersionSpec = pythonVersionToSemantic(desugaredVersionSpec);
        core.debug(`Semantic version spec of ${version} is ${semanticVersionSpec}`);
        const installDir = tc.find('Python', semanticVersionSpec, architecture);
        if (!installDir) {
            // Fail and list available versions
            const x86Versions = tc
                .findAllVersions('Python', 'x86')
                .map(s => `${s} (x86)`)
                .join(os.EOL);
            const x64Versions = tc
                .findAllVersions('Python', 'x64')
                .map(s => `${s} (x64)`)
                .join(os.EOL);
            throw new Error([
                `Version ${version} with arch ${architecture} not found`,
                'Available versions:',
                x86Versions,
                x64Versions
            ].join(os.EOL));
        }
        core.exportVariable('pythonLocation', installDir);
        core.addPath(installDir);
        core.addPath(binDir(installDir));
        if (IS_WINDOWS) {
            // Add --user directory
            // `installDir` from tool cache should look like $AGENT_TOOLSDIRECTORY/Python/<semantic version>/x64/
            // So if `findLocalTool` succeeded above, we must have a conformant `installDir`
            const version = path.basename(path.dirname(installDir));
            const major = semver.major(version);
            const minor = semver.minor(version);
            const userScriptsDir = path.join(process.env['APPDATA'] || '', 'Python', `Python${major}${minor}`, 'Scripts');
            core.addPath(userScriptsDir);
        }
        // On Linux and macOS, pip will create the --user directory and add it to PATH as needed.
    });
}
/** Convert versions like `3.8-dev` to a version like `>= 3.8.0-a0`. */
function desugarDevVersion(versionSpec) {
    if (versionSpec.endsWith('-dev')) {
        const versionRoot = versionSpec.slice(0, -'-dev'.length);
        return `>= ${versionRoot}.0-a0`;
    }
    else {
        return versionSpec;
    }
}
/**
 * Python's prerelease versions look like `3.7.0b2`.
 * This is the one part of Python versioning that does not look like semantic versioning, which specifies `3.7.0-b2`.
 * If the version spec contains prerelease versions, we need to convert them to the semantic version equivalent.
 */
function pythonVersionToSemantic(versionSpec) {
    const prereleaseVersion = /(\d+\.\d+\.\d+)((?:a|b|rc)\d*)/g;
    return versionSpec.replace(prereleaseVersion, '$1-$2');
}
exports.pythonVersionToSemantic = pythonVersionToSemantic;
function findPythonVersion(version, architecture) {
    return __awaiter(this, void 0, void 0, function* () {
        switch (version.toUpperCase()) {
            case 'PYPY2':
                return usePyPy(2, architecture);
            case 'PYPY3':
                return usePyPy(3, architecture);
            default:
                return yield useCpythonVersion(version, architecture);
        }
    });
}
exports.findPythonVersion = findPythonVersion;
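The two version-spec helpers near the end of `lib/find-python.js` above are pure string functions, so they can be tried in isolation. The copies below keep the logic from that file unchanged (only reformatted) and chain the helpers in the same order as `useCpythonVersion`: desugar a `-dev` suffix first, then turn Python-style prerelease tags into SemVer prereleases. The expected strings follow directly from the template and the regular expression.

```javascript
// Copied from lib/find-python.js: `3.8-dev` -> `>= 3.8.0-a0`.
function desugarDevVersion(versionSpec) {
  if (versionSpec.endsWith("-dev")) {
    const versionRoot = versionSpec.slice(0, -"-dev".length);
    return `>= ${versionRoot}.0-a0`;
  }
  return versionSpec;
}

// Copied from lib/find-python.js: Python prerelease `3.7.0b2` -> SemVer `3.7.0-b2`.
function pythonVersionToSemantic(versionSpec) {
  const prereleaseVersion = /(\d+\.\d+\.\d+)((?:a|b|rc)\d*)/g;
  return versionSpec.replace(prereleaseVersion, "$1-$2");
}

for (const spec of ["3.x", "3.8-dev", "3.7.0b2", ">= 3.8.0rc1 < 3.9"]) {
  // Same order as useCpythonVersion: desugar first, then convert prereleases.
  console.log(`${spec} -> ${pythonVersionToSemantic(desugarDevVersion(spec))}`);
}
// 3.x -> 3.x
// 3.8-dev -> >= 3.8.0-a0
// 3.7.0b2 -> 3.7.0-b2
// >= 3.8.0rc1 < 3.9 -> >= 3.8.0-rc1 < 3.9
```

The converted string is what `useCpythonVersion` passes to `tc.find('Python', semanticVersionSpec, architecture)`, which is why a spec like `3.7.0b2` has to gain its hyphen before SemVer range matching can treat it as a prerelease.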

lib/setup-python.js (normal file, 37 lines changed)

@@ -0,0 +1,37 @@
"use strict";
var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) {
    return new (P || (P = Promise))(function (resolve, reject) {
        function fulfilled(value) { try { step(generator.next(value)); } catch (e) { reject(e); } }
        function rejected(value) { try { step(generator["throw"](value)); } catch (e) { reject(e); } }
        function step(result) { result.done ? resolve(result.value) : new P(function (resolve) { resolve(result.value); }).then(fulfilled, rejected); }
        step((generator = generator.apply(thisArg, _arguments || [])).next());
    });
};
var __importStar = (this && this.__importStar) || function (mod) {
    if (mod && mod.__esModule) return mod;
    var result = {};
    if (mod != null) for (var k in mod) if (Object.hasOwnProperty.call(mod, k)) result[k] = mod[k];
    result["default"] = mod;
    return result;
};
Object.defineProperty(exports, "__esModule", { value: true });
const core = __importStar(require("@actions/core"));
const finder = __importStar(require("./find-python"));
const path = __importStar(require("path"));
function run() {
    return __awaiter(this, void 0, void 0, function* () {
        try {
            let version = core.getInput('python-version');
            if (version) {
                const arch = core.getInput('architecture', { required: true });
                yield finder.findPythonVersion(version, arch);
            }
            const matchersPath = path.join(__dirname, '..', '.github');
            console.log(`##[add-matcher]${path.join(matchersPath, 'python.json')}`);
        }
        catch (err) {
            core.setFailed(err.message);
        }
    });
}
run();
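On Windows, `useCpythonVersion` in `lib/find-python.js` above also adds the `pip install --user` scripts directory to PATH, deriving the `PythonXY` folder name from the version directory of the tool-cache path. The sketch below replays that derivation with `path.win32`, so it runs on any OS. The install path and `APPDATA` value are made-up examples, `userScriptsDir` is a hypothetical name, and, as a simplifying assumption, major and minor are split by hand instead of with the `semver` package the original calls.

```javascript
const path = require("path");

// Mirrors the Windows branch of useCpythonVersion: installDir is expected to
// look like <tool cache>\Python\<version>\<arch>, so the version is the name
// of the parent directory.
function userScriptsDir(installDir, appData) {
  const win = path.win32;
  const version = win.basename(win.dirname(installDir));
  // Assumption: split "X.Y.Z" by hand instead of semver.major()/semver.minor().
  const [major, minor] = version.split(".");
  return win.join(appData || "", "Python", `Python${major}${minor}`, "Scripts");
}

console.log(userScriptsDir(
  "C:\\hostedtoolcache\\windows\\Python\\3.7.6\\x64",
  "C:\\Users\\runneradmin\\AppData\\Roaming"
));
// C:\Users\runneradmin\AppData\Roaming\Python\Python37\Scripts
```

The derivation only works because the tool cache nests `<version>\<arch>`; an install directory outside that layout would yield a meaningless `PythonXY` name.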

node_modules/.bin/semver (generated, vendored, normal file, 15 lines changed)

@@ -0,0 +1,15 @@
#!/bin/sh
basedir=$(dirname "$(echo "$0" | sed -e 's,\\,/,g')")

case `uname` in
    *CYGWIN*) basedir=`cygpath -w "$basedir"`;;
esac

if [ -x "$basedir/node" ]; then
  "$basedir/node" "$basedir/../semver/bin/semver.js" "$@"
  ret=$?
else
  node "$basedir/../semver/bin/semver.js" "$@"
  ret=$?
fi
exit $ret

node_modules/.bin/semver.cmd (generated, vendored, normal file, 7 lines changed)

@@ -0,0 +1,7 @@
@IF EXIST "%~dp0\node.exe" (
  "%~dp0\node.exe" "%~dp0\..\semver\bin\semver.js" %*
) ELSE (
  @SETLOCAL
  @SET PATHEXT=%PATHEXT:;.JS;=;%
  node "%~dp0\..\semver\bin\semver.js" %*
)

node_modules/.bin/uuid (generated, vendored, normal file, 15 lines changed)

@@ -0,0 +1,15 @@
#!/bin/sh
basedir=$(dirname "$(echo "$0" | sed -e 's,\\,/,g')")

case `uname` in
    *CYGWIN*) basedir=`cygpath -w "$basedir"`;;
esac

if [ -x "$basedir/node" ]; then
  "$basedir/node" "$basedir/../uuid/bin/uuid" "$@"
  ret=$?
else
  node "$basedir/../uuid/bin/uuid" "$@"
  ret=$?
fi
exit $ret

node_modules/.bin/uuid.cmd (generated, vendored, normal file, 7 lines changed)

@@ -0,0 +1,7 @@
@IF EXIST "%~dp0\node.exe" (
  "%~dp0\node.exe" "%~dp0\..\uuid\bin\uuid" %*
) ELSE (
  @SETLOCAL
  @SET PATHEXT=%PATHEXT:;.JS;=;%
  node "%~dp0\..\uuid\bin\uuid" %*
)

node_modules/@actions/core/README.md (generated, vendored, normal file, 81 lines changed)

@@ -0,0 +1,81 @@
# `@actions/core`

> Core functions for setting results, logging, registering secrets and exporting variables across actions

## Usage

#### Inputs/Outputs

You can use this library to get inputs or set outputs:

```
const core = require('@actions/core');

const myInput = core.getInput('inputName', { required: true });

// Do stuff

core.setOutput('outputKey', 'outputVal');
```

#### Exporting variables/secrets

You can also export variables and secrets for future steps. Variables get set in the environment automatically, while secrets must be scoped into the environment from a workflow using `${{ secrets.FOO }}`. Secrets will also be masked from the logs:

```
const core = require('@actions/core');

// Do stuff

core.exportVariable('envVar', 'Val');
core.exportSecret('secretVar', variableWithSecretValue);
```

#### PATH Manipulation

You can explicitly add items to the path for all remaining steps in a workflow:

```
const core = require('@actions/core');

core.addPath('pathToTool');
```

#### Exit codes

You should use this library to set the failing exit code for your action:

```
const core = require('@actions/core');

try {
  // Do stuff
}
catch (err) {
  // setFailed logs the message and sets a failing exit code
  core.setFailed(`Action failed with error ${err}`);
}

```

#### Logging

Finally, this library provides some utilities for logging:

```
const core = require('@actions/core');

const myInput = core.getInput('input');
try {
  core.debug('Inside try block');

  if (!myInput) {
    core.warning('myInput was not set');
  }

  // Do stuff
}
catch (err) {
  core.error(`Error ${err}, action may still succeed though`);
}
```
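The `exportVariable` and `addPath` calls in the README above take effect in two places: the current process environment, and a `##[...]` command written to stdout that the runner applies to later steps. The sketch below models both effects under stated assumptions: the command names `set-env` and `add-path` are assumed from early `@actions/core` releases (check `core.js` in the vendored package for the exact names), the functions return the command line instead of writing it, they take the environment object as a parameter so they run outside a workflow, and they skip the value escaping that `command.js` applies. `addPathSketch` and `exportVariableSketch` are hypothetical names.

```javascript
const path = require("path");

// Hypothetical model of core.addPath: prepend the directory to PATH for the
// current process and return the command line the runner would read.
function addPathSketch(inputPath, env) {
  env.PATH = `${inputPath}${path.delimiter}${env.PATH || ""}`;
  return `##[add-path]${inputPath}`;
}

// Hypothetical model of core.exportVariable: set the variable for the current
// process and return a set-env command for later steps (no escaping applied).
function exportVariableSketch(name, val, env) {
  env[name] = val;
  return `##[set-env name=${name};]${val}`;
}

const env = { PATH: "/usr/bin" };
console.log(exportVariableSketch("pythonLocation", "/opt/hostedtoolcache/Python/3.7.6/x64", env));
console.log(addPathSketch("/opt/hostedtoolcache/Python/3.7.6/x64/bin", env));
console.log(env.PATH);
```

This two-sided behavior is what `lib/find-python.js` relies on when it calls `core.exportVariable('pythonLocation', ...)` and `core.addPath(...)`: the chosen interpreter is visible both to the action itself and to every following step.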

node_modules/@actions/core/lib/command.d.ts (generated, vendored, normal file, 16 lines changed)

@@ -0,0 +1,16 @@
interface CommandProperties {
    [key: string]: string;
}
/**
 * Commands
 *
 * Command Format:
 * ##[name key=value;key=value]message
 *
 * Examples:
 * ##[warning]This is the user warning message
 * ##[set-secret name=mypassword]definatelyNotAPassword!
 */
export declare function issueCommand(command: string, properties: CommandProperties, message: string): void;
export declare function issue(name: string, message: string): void;
export {};

node_modules/@actions/core/lib/command.js (generated, vendored, normal file, 66 lines changed)

@@ -0,0 +1,66 @@
"use strict";
Object.defineProperty(exports, "__esModule", { value: true });
const os = require("os");
/**
 * Commands
 *
 * Command Format:
 * ##[name key=value;key=value]message
 *
 * Examples:
 * ##[warning]This is the user warning message
 * ##[set-secret name=mypassword]definatelyNotAPassword!
 */
function issueCommand(command, properties, message) {
    const cmd = new Command(command, properties, message);
    process.stdout.write(cmd.toString() + os.EOL);
}
exports.issueCommand = issueCommand;
function issue(name, message) {
    issueCommand(name, {}, message);
}
exports.issue = issue;
const CMD_PREFIX = '##[';
class Command {
    constructor(command, properties, message) {
        if (!command) {
            command = 'missing.command';
        }
        this.command = command;
        this.properties = properties;
        this.message = message;
    }
    toString() {
        let cmdStr = CMD_PREFIX + this.command;
        if (this.properties && Object.keys(this.properties).length > 0) {
            cmdStr += ' ';
            for (const key in this.properties) {
                if (this.properties.hasOwnProperty(key)) {
                    const val = this.properties[key];
                    if (val) {
                        // safely append the val - avoid blowing up when attempting to
                        // call .replace() if message is not a string for some reason
                        cmdStr += `${key}=${escape(`${val || ''}`)};`;
                    }
                }
            }
        }
        cmdStr += ']';
        // safely append the message - avoid blowing up when attempting to
        // call .replace() if message is not a string for some reason
        const message = `${this.message || ''}`;
        cmdStr += escapeData(message);
        return cmdStr;
    }
}
function escapeData(s) {
    return s.replace(/\r/g, '%0D').replace(/\n/g, '%0A');
}
function escape(s) {
    return s
        .replace(/\r/g, '%0D')
        .replace(/\n/g, '%0A')
        .replace(/]/g, '%5D')
        .replace(/;/g, '%3B');
}
//# sourceMappingURL=command.js.map
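`Command.toString` in `command.js` above can be condensed into a single formatting function to show exactly what reaches stdout. This sketch follows the escaping rules in that file (`%0D` and `%0A` for the message; additionally `%5D` and `%3B` for property values), keeps the trailing `;` after each property, and skips falsy values as the `if (val)` check does. `formatCommand` and `escapeProperty` are hypothetical names, not exports of `@actions/core`.

```javascript
// Escaping for the message part, as in escapeData() in command.js.
function escapeData(s) {
  return s.replace(/\r/g, "%0D").replace(/\n/g, "%0A");
}

// Escaping for property values, as in escape() in command.js.
function escapeProperty(s) {
  return escapeData(s).replace(/]/g, "%5D").replace(/;/g, "%3B");
}

// Hypothetical condensed Command.toString(): ##[name key=value;key=value;]message
function formatCommand(command, properties = {}, message = "") {
  let out = `##[${command || "missing.command"}`;
  const keys = properties ? Object.keys(properties) : [];
  if (keys.length > 0) {
    out += " ";
    for (const key of keys) {
      const val = properties[key];
      // Falsy values are skipped, mirroring the `if (val)` check.
      if (val) out += `${key}=${escapeProperty(String(val))};`;
    }
  }
  return `${out}]${escapeData(String(message || ""))}`;
}

console.log(formatCommand("warning", {}, "This is the user warning message"));
// ##[warning]This is the user warning message
console.log(formatCommand("set-env", { name: "a;b]" }, "line1\nline2"));
// ##[set-env name=a%3Bb%5D;]line1%0Aline2
```

Escaping `]` and `;` only inside property values is what keeps the `key=value;` list parseable, while a raw newline in either part would otherwise end the command line early.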

node_modules/@actions/core/lib/command.js.map (generated, vendored, normal file, 1 line changed)

@@ -0,0 +1 @@

{"version":3,"file":"command.js","sourceRoot":"","sources":["../src/command.ts"],"names":[],"mappings":";;AAAA,yBAAwB;AAQxB;;;;;;;;;GASG;AACH,SAAgB,YAAY,CAC1B,OAAe,EACf,UAA6B,EAC7B,OAAe;IAEf,MAAM,GAAG,GAAG,IAAI,OAAO,CAAC,OAAO,EAAE,UAAU,EAAE,OAAO,CAAC,CAAA;IACrD,OAAO,CAAC,MAAM,CAAC,KAAK,CAAC,GAAG,CAAC,QAAQ,EAAE,GAAG,EAAE,CAAC,GAAG,CAAC,CAAA;AAC/C,CAAC;AAPD,oCAOC;AAED,SAAgB,KAAK,CAAC,IAAY,EAAE,OAAe;IACjD,YAAY,CAAC,IAAI,EAAE,EAAE,EAAE,OAAO,CAAC,CAAA;AACjC,CAAC;AAFD,sBAEC;AAED,MAAM,UAAU,GAAG,KAAK,CAAA;AAExB,MAAM,OAAO;IAKX,YAAY,OAAe,EAAE,UAA6B,EAAE,OAAe;QACzE,IAAI,CAAC,OAAO,EAAE;YACZ,OAAO,GAAG,iBAAiB,CAAA;SAC5B;QAED,IAAI,CAAC,OAAO,GAAG,OAAO,CAAA;QACtB,IAAI,CAAC,UAAU,GAAG,UAAU,CAAA;QAC5B,IAAI,CAAC,OAAO,GAAG,OAAO,CAAA;IACxB,CAAC;IAED,QAAQ;QACN,IAAI,MAAM,GAAG,UAAU,GAAG,IAAI,CAAC,OAAO,CAAA;QAEtC,IAAI,IAAI,CAAC,UAAU,IAAI,MAAM,CAAC,IAAI,CAAC,IAAI,CAAC,UAAU,CAAC,CAAC,MAAM,GAAG,CAAC,EAAE;YAC9D,MAAM,IAAI,GAAG,CAAA;YACb,KAAK,MAAM,GAAG,IAAI,IAAI,CAAC,UAAU,EAAE;gBACjC,IAAI,IAAI,CAAC,UAAU,CAAC,cAAc,CAAC,GAAG,CAAC,EAAE;oBACvC,MAAM,GAAG,GAAG,IAAI,CAAC,UAAU,CAAC,GAAG,CAAC,CAAA;oBAChC,IAAI,GAAG,EAAE;wBACP,8DAA8D;wBAC9D,6DAA6D;wBAC7D,MAAM,IAAI,GAAG,GAAG,IAAI,MAAM,CAAC,GAAG,GAAG,IAAI,EAAE,EAAE,CAAC,GAAG,CAAA;qBAC9C;iBACF;aACF;SACF;QAED,MAAM,IAAI,GAAG,CAAA;QAEb,kEAAkE;QAClE,6DAA6D;QAC7D,MAAM,OAAO,GAAG,GAAG,IAAI,CAAC,OAAO,IAAI,EAAE,EAAE,CAAA;QACvC,MAAM,IAAI,UAAU,CAAC,OAAO,CAAC,CAAA;QAE7B,OAAO,MAAM,CAAA;IACf,CAAC;CACF;AAED,SAAS,UAAU,CAAC,CAAS;IAC3B,OAAO,CAAC,CAAC,OAAO,CAAC,KAAK,EAAE,KAAK,CAAC,CAAC,OAAO,CAAC,KAAK,EAAE,KAAK,CAAC,CAAA;AACtD,CAAC;AAED,SAAS,MAAM,CAAC,CAAS;IACvB,OAAO,CAAC;SACL,OAAO,CAAC,KAAK,EAAE,KAAK,CAAC;SACrB,OAAO,CAAC,KAAK,EAAE,KAAK,CAAC;SACrB,OAAO,CAAC,IAAI,EAAE,KAAK,CAAC;SACpB,OAAO,CAAC,IAAI,EAAE,KAAK,CAAC,CAAA;AACzB,CAAC"}


73

node_modules/@actions/core/lib/core.d.ts

generated

vendored

Normal file


@ -0,0 +1,73 @@

/**
 * Interface for getInput options
 */
export interface InputOptions {
    /** Optional. Whether the input is required. If required and not present, will throw. Defaults to false */
    required?: boolean;
}
/**
 * The code to exit an action
 */
export declare enum ExitCode {
    /**
     * A code indicating that the action was successful
     */
    Success = 0,
    /**
     * A code indicating that the action was a failure
     */
    Failure = 1
}
/**
 * sets env variable for this action and future actions in the job
 * @param name the name of the variable to set
 * @param val the value of the variable
 */
export declare function exportVariable(name: string, val: string): void;
/**
 * exports the variable and registers a secret which will get masked from logs
 * @param name the name of the variable to set
 * @param val value of the secret
 */
export declare function exportSecret(name: string, val: string): void;
/**
 * Prepends inputPath to the PATH (for this action and future actions)
 * @param inputPath
 */
export declare function addPath(inputPath: string): void;
/**
 * Gets the value of an input. The value is also trimmed.
 *
 * @param name name of the input to get
 * @param options optional. See InputOptions.
 * @returns string
 */
export declare function getInput(name: string, options?: InputOptions): string;
/**
 * Sets the value of an output.
 *
 * @param name name of the output to set
 * @param value value to store
 */
export declare function setOutput(name: string, value: string): void;
/**
 * Sets the action status to failed.
 * When the action exits it will be with an exit code of 1
 * @param message add error issue message
 */
export declare function setFailed(message: string): void;
/**
 * Writes debug message to user log
 * @param message debug message
 */
export declare function debug(message: string): void;
/**
 * Adds an error issue
 * @param message error issue message
 */
export declare function error(message: string): void;
/**
 * Adds an warning issue
 * @param message warning issue message
 */
export declare function warning(message: string): void;
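`ExitCode` is declared as a numeric TypeScript enum. For numeric enums, tsc emits an object that holds both a name-to-value and a value-to-name entry, which is what the `ExitCode[ExitCode["Success"] = 0] = "Success"` lines in core.js produce. The sketch below reproduces that compiled pattern by hand in plain JavaScript so the reverse lookup can be checked directly. `makeExitCode` is a hypothetical helper used only for illustration.

```javascript
// Hand-written equivalent of the object tsc emits for a numeric enum.
// `makeExitCode` is hypothetical; it only illustrates the compiled shape.
function makeExitCode() {
  const ExitCode = {};
  // The inner assignment evaluates to the value (0), which then becomes a
  // key pointing back to the name: ExitCode[0] = "Success".
  ExitCode[(ExitCode["Success"] = 0)] = "Success";
  ExitCode[(ExitCode["Failure"] = 1)] = "Failure";
  return ExitCode;
}

const ExitCode = makeExitCode();
console.log(ExitCode.Failure); // prints: 1
console.log(ExitCode[1]);      // prints: Failure
```

This is why `setFailed` can write `process.exitCode = ExitCode.Failure` and get the plain number 1, while `ExitCode[1]` still recovers the readable name.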


116

node_modules/@actions/core/lib/core.js

generated

vendored

Normal file


@ -0,0 +1,116 @@

"use strict";
Object.defineProperty(exports, "__esModule", { value: true });
const command_1 = require("./command");
const path = require("path");
/**
 * The code to exit an action
 */
var ExitCode;
(function (ExitCode) {
    /**
     * A code indicating that the action was successful
     */
    ExitCode[ExitCode["Success"] = 0] = "Success";
    /**
     * A code indicating that the action was a failure
     */
    ExitCode[ExitCode["Failure"] = 1] = "Failure";
})(ExitCode = exports.ExitCode || (exports.ExitCode = {}));
//-----------------------------------------------------------------------
// Variables
//-----------------------------------------------------------------------
/**
 * sets env variable for this action and future actions in the job
 * @param name the name of the variable to set
 * @param val the value of the variable
 */
function exportVariable(name, val) {
    process.env[name] = val;
    command_1.issueCommand('set-env', { name }, val);
}
exports.exportVariable = exportVariable;
/**
 * exports the variable and registers a secret which will get masked from logs
 * @param name the name of the variable to set
 * @param val value of the secret
 */
function exportSecret(name, val) {
    exportVariable(name, val);
    command_1.issueCommand('set-secret', {}, val);
}
exports.exportSecret = exportSecret;
/**
 * Prepends inputPath to the PATH (for this action and future actions)
 * @param inputPath
 */
function addPath(inputPath) {
    command_1.issueCommand('add-path', {}, inputPath);
    process.env['PATH'] = `${inputPath}${path.delimiter}${process.env['PATH']}`;
}
exports.addPath = addPath;
/**
 * Gets the value of an input. The value is also trimmed.
 *
 * @param name name of the input to get
 * @param options optional. See InputOptions.
 * @returns string
 */
function getInput(name, options) {
    const val = process.env[`INPUT_${name.replace(' ', '_').toUpperCase()}`] || '';
    if (options && options.required && !val) {
        throw new Error(`Input required and not supplied: ${name}`);
    }
    return val.trim();
}
exports.getInput = getInput;
/**
 * Sets the value of an output.
 *
 * @param name name of the output to set
 * @param value value to store
 */
function setOutput(name, value) {
    command_1.issueCommand('set-output', { name }, value);
}
exports.setOutput = setOutput;
//-----------------------------------------------------------------------
// Results
//-----------------------------------------------------------------------
/**
 * Sets the action status to failed.
 * When the action exits it will be with an exit code of 1
 * @param message add error issue message
 */
function setFailed(message) {
    process.exitCode = ExitCode.Failure;
    error(message);
}
exports.setFailed = setFailed;
//-----------------------------------------------------------------------
// Logging Commands
//-----------------------------------------------------------------------
/**
 * Writes debug message to user log
 * @param message debug message
 */
function debug(message) {
    command_1.issueCommand('debug', {}, message);
}
exports.debug = debug;
/**
 * Adds an error issue
 * @param message error issue message
 */
function error(message) {
    command_1.issue('error', message);
}
exports.error = error;
/**
 * Adds an warning issue
 * @param message warning issue message
 */
function warning(message) {
    command_1.issue('warning', message);
}
exports.warning = warning;
//# sourceMappingURL=core.js.map
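`getInput` reads each input from an environment variable named `INPUT_<NAME>`, and `addPath` prepends a directory to `PATH` using `path.delimiter`. The sketch below mirrors those two bodies from core.js as standalone functions that take an explicit `env` object instead of touching `process.env`. The names `inputEnvName`, `readInput` and `prependPath` are hypothetical, and the `command_1.issueCommand` calls are omitted. One detail worth seeing concretely: `name.replace(' ', '_')` uses a string pattern, which replaces only the first space, so an input named `my input name` maps to `INPUT_MY_INPUT NAME`.

```javascript
const path = require('path');

// Same key derivation as getInput in core.js. A string pattern passed to
// String.prototype.replace only replaces the FIRST match.
function inputEnvName(name) {
  return `INPUT_${name.replace(' ', '_').toUpperCase()}`;
}

// Mirrors getInput, but reads from an explicit env object (hypothetical).
function readInput(env, name, options) {
  const val = env[inputEnvName(name)] || '';
  if (options && options.required && !val) {
    throw new Error(`Input required and not supplied: ${name}`);
  }
  return val.trim();
}

// Mirrors the PATH update in addPath: the new directory goes first,
// joined with the platform separator (':' on POSIX, ';' on Windows).
function prependPath(env, inputPath) {
  return `${inputPath}${path.delimiter}${env['PATH']}`;
}

const env = {
  INPUT_PYTHON_VERSION: '  3.7 ',
  PATH: ['/usr/bin', '/bin'].join(path.delimiter),
};
console.log(readInput(env, 'python version')); // prints: 3.7
console.log(inputEnvName('my input name'));    // prints: INPUT_MY_INPUT NAME
```

Passing `env` explicitly keeps the sketch side-effect free; the vendored functions instead read and write `process.env`, so their changes are visible to the rest of the running action.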


1

node_modules/@actions/core/lib/core.js.map

generated

vendored

Normal file


@ -0,0 +1 @@


{"version":3,"file":"core.js","sourceRoot":"","sources":["../src/core.ts"],"names":[],"mappings":";;AAAA,uCAA6C;AAE7C,6BAA4B;AAU5B;;GAEG;AACH,IAAY,QAUX;AAVD,WAAY,QAAQ;IAClB;;OAEG;IACH,6CAAW,CAAA;IAEX;;OAEG;IACH,6CAAW,CAAA;AACb,CAAC,EAVW,QAAQ,GAAR,gBAAQ,KAAR,gBAAQ,QAUnB;AAED,yEAAyE;AACzE,YAAY;AACZ,yEAAyE;AAEzE;;;;GAIG;AACH,SAAgB,cAAc,CAAC,IAAY,EAAE,GAAW;IACtD,OAAO,CAAC,GAAG,CAAC,IAAI,CAAC,GAAG,GAAG,CAAA;IACvB,sBAAY,CAAC,SAAS,EAAE,EAAC,IAAI,EAAC,EAAE,GAAG,CAAC,CAAA;AACtC,CAAC;AAHD,wCAGC;AAED;;;;GAIG;AACH,SAAgB,YAAY,CAAC,IAAY,EAAE,GAAW;IACpD,cAAc,CAAC,IAAI,EAAE,GAAG,CAAC,CAAA;IACzB,sBAAY,CAAC,YAAY,EAAE,EAAE,EAAE,GAAG,CAAC,CAAA;AACrC,CAAC;AAHD,oCAGC;AAED;;;GAGG;AACH,SAAgB,OAAO,CAAC,SAAiB;IACvC,sBAAY,CAAC,UAAU,EAAE,EAAE,EAAE,SAAS,CAAC,CAAA;IACvC,OAAO,CAAC,GAAG,CAAC,MAAM,CAAC,GAAG,GAAG,SAAS,GAAG,IAAI,CAAC,SAAS,GAAG,OAAO,CAAC,GAAG,CAAC,MAAM,CAAC,EAAE,CAAA;AAC7E,CAAC;AAHD,0BAGC;AAED;;;;;;GAMG;AACH,SAAgB,QAAQ,CAAC,IAAY,EAAE,OAAsB;IAC3D,MAAM,GAAG,GACP,OAAO,CAAC,GAAG,CAAC,SAAS,IAAI,CAAC,OAAO,CAAC,GAAG,EAAE,GAAG,CAAC,CAAC,WAAW,EAAE,EAAE,CAAC,IAAI,EAAE,CAAA;IACpE,IAAI,OAAO,IAAI,OAAO,CAAC,QAAQ,IAAI,CAAC,GAAG,EAAE;QACvC,MAAM,IAAI,KAAK,CAAC,oCAAoC,IAAI,EAAE,CAAC,CAAA;KAC5D;IAED,OAAO,GAAG,CAAC,IAAI,EAAE,CAAA;AACnB,CAAC;AARD,4BAQC;AAED;;;;;GAKG;AACH,SAAgB,SAAS,CAAC,IAAY,EAAE,KAAa;IACnD,sBAAY,CAAC,YAAY,EAAE,EAAC,IAAI,EAAC,EAAE,KAAK,CAAC,CAAA;AAC3C,CAAC;AAFD,8BAEC;AAED,yEAAyE;AACzE,UAAU;AACV,yEAAyE;AAEzE;;;;GAIG;AACH,SAAgB,SAAS,CAAC,OAAe;IACvC,OAAO,CAAC,QAAQ,GAAG,QAAQ,CAAC,OAAO,CAAA;IACnC,KAAK,CAAC,OAAO,CAAC,CAAA;AAChB,CAAC;AAHD,8BAGC;AAED,yEAAyE;AACzE,mBAAmB;AACnB,yEAAyE;AAEzE;;;GAGG;AACH,SAAgB,KAAK,CAAC,OAAe;IACnC,sBAAY,CAAC,OAAO,EAAE,EAAE,EAAE,OAAO,CAAC,CAAA;AACpC,CAAC;AAFD,sBAEC;AAED;;;GAGG;AACH,SAAgB,KAAK,CAAC,OAAe;IACnC,eAAK,CAAC,OAAO,EAAE,OAAO,CAAC,CAAA;AACzB,CAAC;AAFD,sBAEC;AAED;;;GAGG;AACH,SAAgB,OAAO,CAAC,OAAe;IACrC,eAAK,CAAC,SAAS,EAAE,OAAO,CAAC,CAAA;AAC3B,CAAC;AAFD,0BAEC"}


64

node_modules/@actions/core/package.json

generated

vendored

Normal file


@ -0,0 +1,64 @@

{
  "_from": "@actions/core@^1.0.0",
  "_id": "@actions/core@1.0.0",
  "_inBundle": false,
  "_integrity": "sha512-aMIlkx96XH4E/2YZtEOeyrYQfhlas9jIRkfGPqMwXD095Rdkzo4lB6ZmbxPQSzD+e1M+Xsm98ZhuSMYGv/AlqA==",
  "_location": "/@actions/core",
  "_phantomChildren": {},
  "_requested": {
    "type": "range",
    "registry": true,
    "raw": "@actions/core@^1.0.0",
    "name": "@actions/core",
    "escapedName": "@actions%2fcore",
    "scope": "@actions",
    "rawSpec": "^1.0.0",
    "saveSpec": null,
    "fetchSpec": "^1.0.0"
  },
  "_requiredBy": [
    "/",
    "/@actions/tool-cache"
  ],
  "_resolved": "https://registry.npmjs.org/@actions/core/-/core-1.0.0.tgz",
  "_shasum": "4a090a2e958cc300b9ea802331034d5faf42d239",
  "_spec": "@actions/core@^1.0.0",
  "_where": "C:\\Users\\damccorm\\Documents\\setup-python",
  "bugs": {
    "url": "https://github.com/actions/toolkit/issues"
  },
  "bundleDependencies": false,
  "deprecated": false,
  "description": "Actions core lib",
  "devDependencies": {
    "@types/node": "^12.0.2"
  },
  "directories": {
    "lib": "lib",
    "test": "__tests__"
  },
  "files": [
    "lib"
  ],
  "gitHead": "a40bce7c8d382aa3dbadaa327acbc696e9390e55",
  "homepage": "https://github.com/actions/toolkit/tree/master/packages/core",
  "keywords": [
    "core",
    "actions"
  ],
  "license": "MIT",
  "main": "lib/core.js",
  "name": "@actions/core",
  "publishConfig": {
    "access": "public"
  },
  "repository": {
    "type": "git",
    "url": "git+https://github.com/actions/toolkit.git"
  },
  "scripts": {
    "test": "echo \"Error: run tests from root\" && exit 1",
    "tsc": "tsc"
  },
  "version": "1.0.0"
}

60

node_modules/@actions/exec/README.md

generated

vendored

Normal file


@ -0,0 +1,60 @@

# `@actions/exec`

## Usage

#### Basic

You can use this package to execute your tools on the command line in a cross-platform way:

```
const exec = require('@actions/exec');

await exec.exec('node index.js');
```

#### Args

You can also pass in arg arrays:

```
const exec = require('@actions/exec');

await exec.exec('node', ['index.js', 'foo=bar']);
```

#### Output/options

Capture output or specify [other options](https://github.com/actions/toolkit/blob/d9347d4ab99fd507c0b9104b2cf79fb44fcc827d/packages/exec/src/interfaces.ts#L5):

```
const exec = require('@actions/exec');

let myOutput = '';
let myError = '';

const options = {};
options.listeners = {
  stdout: (data: Buffer) => {
    myOutput += data.toString();
  },
  stderr: (data: Buffer) => {
    myError += data.toString();
  }
};
options.cwd = './lib';

await exec.exec('node', ['index.js', 'foo=bar'], options);
```

#### Exec tools not in the PATH

You can use it in conjunction with the `which` function from `@actions/io` to execute tools that are not in the PATH:

```
const exec = require('@actions/exec');
const io = require('@actions/io');

const pythonPath: string = await io.which('python', true);

await exec.exec(`"${pythonPath}"`, ['main.py']);
```

12

node_modules/@actions/exec/lib/exec.d.ts

generated

vendored

Normal file


@ -0,0 +1,12 @@

import * as im from './interfaces';
/**
 * Exec a command.
 * Output will be streamed to the live console.
 * Returns promise with return code
 *
 * @param commandLine command to execute (can include additional args). Must be correctly escaped.
 * @param args optional arguments for tool. Escaping is handled by the lib.
 * @param options optional exec options. See ExecOptions
 * @returns Promise<number> exit code
 */
export declare function exec(commandLine: string, args?: string[], options?: im.ExecOptions): Promise<number>;

36

node_modules/@actions/exec/lib/exec.js

generated

vendored

Normal file


@ -0,0 +1,36 @@

"use strict";
var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) {
    return new (P || (P = Promise))(function (resolve, reject) {
        function fulfilled(value) { try { step(generator.next(value)); } catch (e) { reject(e); } }
        function rejected(value) { try { step(generator["throw"](value)); } catch (e) { reject(e); } }
        function step(result) { result.done ? resolve(result.value) : new P(function (resolve) { resolve(result.value); }).then(fulfilled, rejected); }
        step((generator = generator.apply(thisArg, _arguments || [])).next());
    });
};
Object.defineProperty(exports, "__esModule", { value: true });
const tr = require("./toolrunner");
/**
 * Exec a command.
 * Output will be streamed to the live console.
 * Returns promise with return code
 *
 * @param commandLine command to execute (can include additional args). Must be correctly escaped.
 * @param args optional arguments for tool. Escaping is handled by the lib.
 * @param options optional exec options. See ExecOptions
 * @returns Promise<number> exit code
 */
function exec(commandLine, args, options) {
    return __awaiter(this, void 0, void 0, function* () {
        const commandArgs = tr.argStringToArray(commandLine);
        if (commandArgs.length === 0) {
            throw new Error(`Parameter 'commandLine' cannot be null or empty.`);
        }
        // Path to tool to execute should be first arg
        const toolPath = commandArgs[0];
        args = commandArgs.slice(1).concat(args || []);
        const runner = new tr.ToolRunner(toolPath, args, options);
        return runner.exec();
    });
}
exports.exec = exec;
//# sourceMappingURL=exec.js.map
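`exec` tokenizes `commandLine` with `argStringToArray`, treats the first token as the tool path, and places any explicitly passed `args` after the remaining tokens. The real tokenizer lives in toolrunner.js and also deals with backslash escapes inside quotes. The sketch below substitutes a deliberately simplified tokenizer (spaces split tokens, double quotes group them, no escape handling) to show only the composition step. `splitCommandLine` and `resolveInvocation` are hypothetical names, not part of `@actions/exec`.

```javascript
// Simplified stand-in for argStringToArray (hypothetical): splits on
// spaces outside double quotes and drops the quote characters.
// Unlike the library version, it does not handle backslash escapes.
function splitCommandLine(commandLine) {
  const args = [];
  let current = '';
  let inQuotes = false;
  for (const c of commandLine) {
    if (c === '"') {
      inQuotes = !inQuotes;
    } else if (c === ' ' && !inQuotes) {
      if (current.length > 0) {
        args.push(current);
        current = '';
      }
    } else {
      current += c;
    }
  }
  if (current.length > 0) {
    args.push(current);
  }
  return args;
}

// Same composition as exec(): the first token is the tool, and the
// remaining tokens come before any explicitly passed args.
function resolveInvocation(commandLine, args) {
  const commandArgs = splitCommandLine(commandLine);
  if (commandArgs.length === 0) {
    throw new Error(`Parameter 'commandLine' cannot be null or empty.`);
  }
  return {
    toolPath: commandArgs[0],
    args: commandArgs.slice(1).concat(args || []),
  };
}

console.log(JSON.stringify(resolveInvocation('"/opt/my tools/python" -u', ['main.py'])));
// prints: {"toolPath":"/opt/my tools/python","args":["-u","main.py"]}
```

This also explains why the exec README wraps `pythonPath` in double quotes before calling `exec.exec`: without them, a tool path containing spaces would be split into several tokens and the first fragment would be taken as the tool.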


1

node_modules/@actions/exec/lib/exec.js.map

generated

vendored

Normal file


@ -0,0 +1 @@


{"version":3,"file":"exec.js","sourceRoot":"","sources":["../src/exec.ts"],"names":[],"mappings":";;;;;;;;;;AACA,mCAAkC;AAElC;;;;;;;;;GASG;AACH,SAAsB,IAAI,CACxB,WAAmB,EACnB,IAAe,EACf,OAAwB;;QAExB,MAAM,WAAW,GAAG,EAAE,CAAC,gBAAgB,CAAC,WAAW,CAAC,CAAA;QACpD,IAAI,WAAW,CAAC,MAAM,KAAK,CAAC,EAAE;YAC5B,MAAM,IAAI,KAAK,CAAC,kDAAkD,CAAC,CAAA;SACpE;QACD,8CAA8C;QAC9C,MAAM,QAAQ,GAAG,WAAW,CAAC,CAAC,CAAC,CAAA;QAC/B,IAAI,GAAG,WAAW,CAAC,KAAK,CAAC,CAAC,CAAC,CAAC,MAAM,CAAC,IAAI,IAAI,EAAE,CAAC,CAAA;QAC9C,MAAM,MAAM,GAAkB,IAAI,EAAE,CAAC,UAAU,CAAC,QAAQ,EAAE,IAAI,EAAE,OAAO,CAAC,CAAA;QACxE,OAAO,MAAM,CAAC,IAAI,EAAE,CAAA;IACtB,CAAC;CAAA;AAdD,oBAcC"}


35

node_modules/@actions/exec/lib/interfaces.d.ts

generated

vendored

Normal file


@ -0,0 +1,35 @@

/// <reference types="node" />
import * as stream from 'stream';
/**
 * Interface for exec options
 */
export interface ExecOptions {
    /** optional working directory. defaults to current */
    cwd?: string;
    /** optional envvar dictionary. defaults to current process's env */
    env?: {
        [key: string]: string;
    };
    /** optional. defaults to false */
    silent?: boolean;
    /** optional out stream to use. Defaults to process.stdout */
    outStream?: stream.Writable;
    /** optional err stream to use. Defaults to process.stderr */
    errStream?: stream.Writable;
    /** optional. whether to skip quoting/escaping arguments if needed. defaults to false. */
    windowsVerbatimArguments?: boolean;
    /** optional. whether to fail if output to stderr. defaults to false */
    failOnStdErr?: boolean;
    /** optional. defaults to failing on non zero. ignore will not fail leaving it up to the caller */
    ignoreReturnCode?: boolean;
    /** optional. How long in ms to wait for STDIO streams to close after the exit event of the process before terminating. defaults to 10000 */
    delay?: number;
    /** optional. Listeners for output. Callback functions that will be called on these events */
    listeners?: {
        stdout?: (data: Buffer) => void;
        stderr?: (data: Buffer) => void;
        stdline?: (data: string) => void;
        errline?: (data: string) => void;
        debug?: (data: string) => void;
    };
}

3

node_modules/@actions/exec/lib/interfaces.js

generated

vendored

Normal file


@ -0,0 +1,3 @@

"use strict";
Object.defineProperty(exports, "__esModule", { value: true });
//# sourceMappingURL=interfaces.js.map

1

node_modules/@actions/exec/lib/interfaces.js.map

generated

vendored

Normal file


@ -0,0 +1 @@


{"version":3,"file":"interfaces.js","sourceRoot":"","sources":["../src/interfaces.ts"],"names":[],"mappings":""}


37

node_modules/@actions/exec/lib/toolrunner.d.ts

generated

vendored

Normal file


@ -0,0 +1,37 @@

/// <reference types="node" />
import * as events from 'events';
import * as im from './interfaces';
export declare class ToolRunner extends events.EventEmitter {
    constructor(toolPath: string, args?: string[], options?: im.ExecOptions);
    private toolPath;
    private args;
    private options;
    private _debug;
    private _getCommandString;
    private _processLineBuffer;
    private _getSpawnFileName;
    private _getSpawnArgs;
    private _endsWith;
    private _isCmdFile;
    private _windowsQuoteCmdArg;
    private _uvQuoteCmdArg;
    private _cloneExecOptions;
    private _getSpawnOptions;
    /**
     * Exec a tool.
     * Output will be streamed to the live console.
     * Returns promise with return code
     *
     * @param tool path to tool to exec
     * @param options optional exec options. See ExecOptions
     * @returns number
     */
    exec(): Promise<number>;
}
/**
 * Convert an arg string to an array of args. Handles escaping
 *
 * @param argString string of arguments
 * @returns string[] array of arguments
 */
export declare function argStringToArray(argString: string): string[];
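`ToolRunner` declares a private `_processLineBuffer`. In toolrunner.js it appends a data chunk to a pending string, calls `onLine` for every complete line terminated by `os.EOL`, and keeps the unterminated remainder. In the vendored code that remainder is stored with `strBuffer = s`, which only rebinds the local parameter, so the assignment itself does not hand the remainder back to the caller. The sketch below shows the same line-splitting technique with the remainder returned explicitly. `splitLines` is a hypothetical name, and it takes the line separator as a parameter so the example behaves the same on every OS.

```javascript
// Splits streamed output into complete lines (hypothetical helper).
// Returns the unterminated tail so the caller can carry it into the next
// call; the vendored helper does not return it.
function splitLines(chunk, pending, eol, onLine) {
  let s = pending + chunk.toString();
  let n = s.indexOf(eol);
  while (n > -1) {
    onLine(s.substring(0, n));
    s = s.substring(n + eol.length);
    n = s.indexOf(eol);
  }
  return s; // partial line, possibly ''
}

// A line split across two chunks is emitted once, intact.
const lines = [];
let pending = '';
for (const chunk of [Buffer.from('first li'), Buffer.from('ne\nsecond\nthi')]) {
  pending = splitLines(chunk, pending, '\n', (line) => lines.push(line));
}
console.log(JSON.stringify(lines), JSON.stringify(pending));
// prints: ["first line","second"] "thi"
```

Returning the tail keeps the helper free of hidden state; a caller would flush any non-empty `pending` value as a final line once the stream closes.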


573

node_modules/@actions/exec/lib/toolrunner.js

generated

vendored

Normal file


@ -0,0 +1,573 @@

"use strict";
var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) {
    return new (P || (P = Promise))(function (resolve, reject) {
        function fulfilled(value) { try { step(generator.next(value)); } catch (e) { reject(e); } }
        function rejected(value) { try { step(generator["throw"](value)); } catch (e) { reject(e); } }
        function step(result) { result.done ? resolve(result.value) : new P(function (resolve) { resolve(result.value); }).then(fulfilled, rejected); }
        step((generator = generator.apply(thisArg, _arguments || [])).next());
    });
};
Object.defineProperty(exports, "__esModule", { value: true });
const os = require("os");
const events = require("events");
const child = require("child_process");
/* eslint-disable @typescript-eslint/unbound-method */
const IS_WINDOWS = process.platform === 'win32';
/*
 * Class for running command line tools. Handles quoting and arg parsing in a platform agnostic way.
 */
class ToolRunner extends events.EventEmitter {
    constructor(toolPath, args, options) {
        super();
        if (!toolPath) {
            throw new Error("Parameter 'toolPath' cannot be null or empty.");
        }
        this.toolPath = toolPath;
        this.args = args || [];
        this.options = options || {};
    }
    _debug(message) {
        if (this.options.listeners && this.options.listeners.debug) {
            this.options.listeners.debug(message);
        }
    }
    _getCommandString(options, noPrefix) {
        const toolPath = this._getSpawnFileName();
        const args = this._getSpawnArgs(options);
        let cmd = noPrefix ? '' : '[command]'; // omit prefix when piped to a second tool
        if (IS_WINDOWS) {
            // Windows + cmd file
            if (this._isCmdFile()) {
                cmd += toolPath;
                for (const a of args) {
                    cmd += ` ${a}`;
                }
            }
            // Windows + verbatim
            else if (options.windowsVerbatimArguments) {
                cmd += `"${toolPath}"`;
                for (const a of args) {
                    cmd += ` ${a}`;
                }
            }
            // Windows (regular)
            else {
                cmd += this._windowsQuoteCmdArg(toolPath);
                for (const a of args) {
                    cmd += ` ${this._windowsQuoteCmdArg(a)}`;
                }
            }
        }
        else {
            // OSX/Linux - this can likely be improved with some form of quoting.
            // creating processes on Unix is fundamentally different than Windows.
            // on Unix, execvp() takes an arg array.
            cmd += toolPath;
            for (const a of args) {
                cmd += ` ${a}`;
            }
        }
        return cmd;
    }
    _processLineBuffer(data, strBuffer, onLine) {
        try {
            let s = strBuffer + data.toString();
            let n = s.indexOf(os.EOL);
            while (n > -1) {
                const line = s.substring(0, n);
                onLine(line);
                // the rest of the string ...
                s = s.substring(n + os.EOL.length);
                n = s.indexOf(os.EOL);
            }
            strBuffer = s;
        }
        catch (err) {
            // streaming lines to console is best effort. Don't fail a build.
            this._debug(`error processing line. Failed with error ${err}`);
        }
    }